AMD’s Bold New Strategy

Last week, NVIDIA unveiled their next-generation flagship GPU, the GTX 1080, along with the slightly less powerful GTX 1070. With both of these new GPUs, the company has made some pretty outrageous — and if at all true, very impressive — claims in terms of performance.

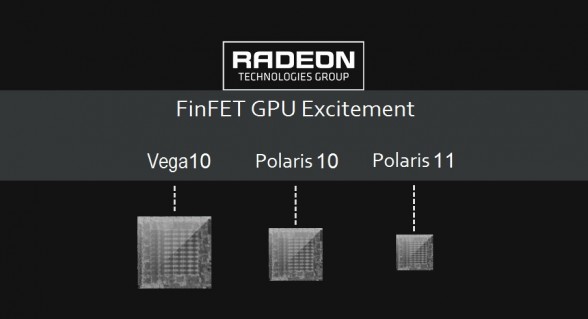

With all the hype surrounding the launch of NVIDIA’s flagship, many people are questioning AMD’s decision to focus on the mainstream desktop and notebook markets with the launch of its upcoming GCN (Graphics Core Next) 4.0 GPUs, codenamed Polaris 10 and 11.

After all, GPU manufacturers have historically released their flagship, or ‘high-end’, products first when launching a new product generation, with ‘mainstream’ and ‘performance-segment’ parts usually following months later, once yields have matured. Seems smart, right? You may even ask yourself: why fix what isn’t broken? Well, I’ll tell you why: AMD sees a tremendous opportunity in upsetting the status quo. It’s a very bold strategy, and I believe it’ll pay off, Cotton. Memes aside, I’ll explain my reasoning below.

Mainstream GPUs account for the majority of GPU sales.

While high-end, flagship-level graphics cards usually carry the largest profit margins, mainstream and performance-segment GPUs account for the vast majority of total graphics card sales. This makes perfect sense when you think about it: most gamers simply can’t afford to shell out ~$500 for a new GPU every couple of years.

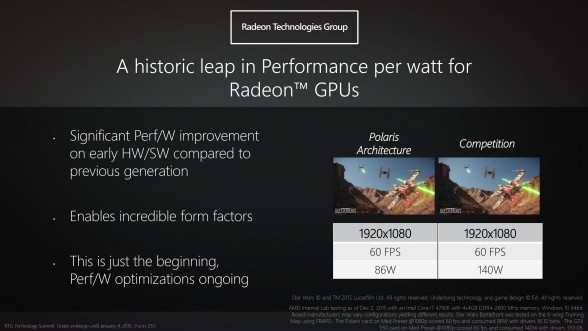

AMD’s Polaris 11 compared against NVIDIA’s GTX 950 in Star Wars Battlefront

Using Steam’s Hardware Survey as an example, we can see that as of April, at least 25% of users who participated in the survey are using a mainstream or performance-segment desktop GPU from the last three hardware generations. When you compare that to the roughly 14% commanded by higher-end cards from the same three generations priced between $350 and $700, you start to see why AMD would want to focus on this larger market segment first. It is also worth noting that these numbers don’t factor in mobile (notebook) GPU users, who would skew the numbers even further toward the mainstream.

Take a look at this report from JPR (Jon Peddie Research), a computer graphics marketing and management-consulting firm that provides market and competitive analysis. It shows that AMD’s discrete GPU sales increased by 6.69% in Q4 2015, which happens to coincide with the release of its performance-segment R9 380X graphics card. In that same quarter, NVIDIA’s desktop discrete GPU shipments were down 7.56% compared to the previous quarter, in which it had released its mainstream GTX 950. These may look like relatively small bumps, but they provide significant insight into the market as a whole. When you consider that AMD could take roughly 7% of NVIDIA’s sales in a single quarter by releasing a graphics card in a price segment where NVIDIA offered relatively little competition (save for the GTX 960 4GB, which was already being beaten by AMD’s R9 380), AMD’s plans start to come into focus.

Competing Without Competition

With NVIDIA focusing on the high-end market first, as usual, it will be a number of months before we see a new performance or mainstream-segment GPU from the Green Team. Just look at the Maxwell-based GTX 900 series: it was introduced with the GTX 980 and 970 in September 2014, but it wasn’t until January 2015 that we saw the cheaper, $200 GTX 960, and it wasn’t until August of that year that the company released the mainstream-targeted GTX 950. It was much the same story with the GTX 600 series, with nearly six months between the initial launch of the GTX 680 and the cheaper GTX 660 and GTX 650 models.

If history is any indication, we can expect anywhere between three and six months before NVIDIA releases new cards that compete with AMD’s Polaris, giving the Red Team ample time to chip away at a significant portion of NVIDIA’s rather large market share. This could also put AMD in a relatively comfortable position when it comes time to release its flagship graphics card based on the HBM2-powered Vega 10 GPU, which is rumored to have been pulled forward to as early as October 2016 from its originally expected 2017 launch.

Vega 10 is rumored to be substantially more powerful than the GTX 1080 and is meant to go toe-to-toe with a possible GTX 1080 Ti or Titan X successor. This could mean that while AMD will initially concede the high-end market to NVIDIA in favor of the larger performance and mainstream segments, it could be planning to beat NVIDIA to the ultra-high-end market to make up the difference. Effectively, AMD would be putting its best effort into avoiding direct competition with NVIDIA altogether, for as long as it possibly can.

Of course, this plan may not be the best for consumers. Competition in every market segment is always best, as it helps ensure you get the most bang for your buck when purchasing a new product. That being said, you can’t necessarily fault AMD for taking this approach: with NVIDIA currently commanding somewhere between 75 and 80% of the total GPU market, AMD has to be extra aggressive about taking back significant portions of it. By breaking from the standard release cycle, AMD sees an opportunity to go after the low-hanging fruit that is the mainstream and performance segments. This should result in significant market share gains for the company, at the cost of some initial mind share from not having a flagship, benchmark-busting GPU available to go toe-to-toe with NVIDIA’s offerings.

Thank you for the article. I think you definitely shared a great perspective on AMD’s new strategy. I appreciate it, bud.

Glad you enjoyed it, Jimmy!

Indeed, this article is great!

Over at Digital Trends, the 16nm GTX 1080 @ 1.6 GHz only beat the 28nm Fury X at 1050 MHz by 13% in the DX12 Ashes of the Singularity benchmark.

A 16nm FinFET process, a GTX 1080 clocked roughly 52% higher than the 28nm Fury X, and it is only 13% better than AMD’s year-old tech? Seriously???

AMD is not giving up much by waiting until October to eat Nvidia’s lunch. For Nvidia to respond with a faster GPU would kill sales of the GTX 1080. Also, new high-end GPUs are largely vaporware for the first few weeks, as yields are not yet that high.

Many buyers just might hold off on buying the GTX 1080 to get the much faster Vega, especially if the Polaris launch is a success.

13% over last year’s AMD tech is a very sad disappointment for a NEW DX12 GPU.

I agree that Asynchronous Compute is a great boon to AMD. With the new APIs the Radeon line performs much better, and AC really makes it sing. And now, sadly, comes the but. Games can be fully DirectX 12 and Vulkan compliant without it, and it is very expensive to implement. Including Ashes, there are four titles that use it. With time, tools and techniques will improve and make it more affordable to put into games, but this will probably take a couple of years.

It’s hard to get too excited about AC when I look back at AMD’s track record. Better graphics on APUs, HSA, Mantle: these were all going to save AMD. And now it’s Asynchronous Compute. They have done things that make them look great in small niches, but in the mainstream they ended up being merely good enough and cheaper.

OK, all is not doom and gloom for them. They did pick up a good contract, sold off the last foundry, and even picked up some grants for technology research. And I’m really hoping they launch a 490X that performs well not just with AC but also in DX11. My 290X is begging to be retired.

Async compute was available in Mantle; it’s not some additional feature they just recently added. The hardware for AC was introduced long ago in the 7900 series and in the current consoles. Very few games use AC because the industry has been waiting for DX12/Vulkan to finally release. Because it’s a new technology, much like the PS3’s Cell, I expected only a select few to implement it or test it in their games. More developers will learn to implement it properly, if not for more FPS, then certainly for more fluid gameplay.

HSA is a whole other discussion; consumers shouldn’t expect to get their hands on it anytime soon anyway. Hardware that supports HSA is still relatively new, and software will follow after that. It’s a long way off, but that hasn’t stopped Nvidia from hiring AMD’s top HSA guy last year, so it certainly isn’t something people should blow off as “another insignificant” tech.

Let’s be real. Mantle was about AMD vs. Intel, not AMD vs. Nvidia.

And if you look at benchmarks, low-level APIs are saving AMD’s CPUs in gaming. However, it is about four to five years too late...

I’m sitting on a 390X until Vega too, IMO.

Nah, the 490X won’t perform that much better than a 290/290X/390/390X. The point of Polaris is to bring power consumption in check so they can develop bigger, better and badder GPUs for consoles, laptops and APUs, while still performing well enough to run in mainstream cards. I think Vega will be the one you want to replace it with. I always felt Polaris was never about overall raw performance.

Pascal’s GTX 1080 is amazing tech, not in terms of raw performance but in performance per watt. Still not the big leap I imagined in my mind. Sad, though; I have a factory-OC’d R9 290 that would like to be retired too.

Depends on whether Vega 10 is a Fury X-family or 7970-family chip.

What if

Vega 10 = 490/490X

Vega 11 = Fury/X successors

Expect Fury X successors to beat Fury X by 35-40%. The card will be at least 70-80% faster than a 290X!

I can see two possible explanations. One: AMD is being silent about their chips because they are $h1tting themselves. Two: they are being silent because Pascal doesn’t stand a chance.

It appears you’ve greatly underestimated the 1080. First, it is well known that Nvidia’s GPUs are basically broken in Ashes of the Singularity; second, there are already benchmarks showing the 1080 far surpassing *everything* on the market.

We don’t need to argue, though, since we’ll be getting more benchmarks than we can handle on Tuesday. I hope AMD’s response is good, but I get the impression AMD’s strategy is to keep saturating the market with its Fury line for longer, which means the GTX 1070 will be challenging that performance point and hammering Fury on price-performance value.

Contrary to what this article says, the ball is in Nvidia’s court; only a drastic price cut on its Fury line can save AMD from major market share loss in the performance sector.

Being broken is one thing; not having hardware support for async compute is another. The game runs well on both AMD and Nvidia cards in DX11, but in Vulkan/DX12 is where the similarities end. The only word from Nvidia’s camp is that they are working on a driver to enable async compute on their cards. I highly doubt Nvidia will come through with such a move. This was just PR talk to control the situation; a software implementation (if possible) is far less capable than actual hardware support. Unlike PhysX, which could simply be offloaded to the CPU, async compute cannot be replicated elsewhere. It’s been eight months since Nvidia said this and there is still no sign of an async driver. It would be really disappointing if the whole Pascal line is simply using “brute force” to overcome this issue.

So far the public knows nothing of the performance or prices of the Polaris range. The R9 490 should be going up against the 1070, judging from the previous 390/970 cards. The new Fury line is expected to start with the 1080’s counterpart, again judging from the previous line of cards. It’s premature to judge AMD’s Fury line at this point without any official data on its products.

The Fury line will fall in price very soon if P10 is as fast as or faster than Fury… I hope P10 is faster and cheaper, but we know nothing about it yet.

Lol, Nvidia’s cards are broken in AoS? Nvidia just doesn’t support DX12 features. No big deal; DX12 was basically made for AMD cards. DX12 had to come out or Mantle would have taken over as the favoured API. Even Intel approached AMD for Mantle support. Now DX12 is out and comparable to Vulkan/Mantle.

The ball is not in Nvidia’s court. People are questioning whether they are even a market leader anymore. What have they done for the industry? CUDA, PhysX, G-Sync? Everything they do is designed to lock everyone else out. The only game they want to play is “I win.” You hear more and more about developers moving to support AMD hardware, and there are rumours of Intel and Apple moving to license AMD hardware and technologies.

The GTX 1080 is an amazing card, don’t get me wrong, but more for performance per watt than raw power. The thing is, AMD knows how to get high compute power out of its cards (the 16nm 1080 is a fraction under 9 TFLOPS; the 28nm Fury X is something like 8.5). They know how to build their cards for a low-level API, they are getting developers on board to leverage their GPUs’ peculiarities, and now they are learning to make low-power GPUs. They also have 80-something percent of the VR market, which is expected to grow very quickly. The “ball” might be in Nvidia’s court, but AMD has the ball and is running away with it. We’ll see if all this helps AMD win back some market share.
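The TFLOPS figures above are theoretical peak single-precision numbers, which are conventionally estimated as shader count × 2 FLOPs per clock (one fused multiply-add) × clock speed. A minimal sketch of that arithmetic, assuming the cards’ public spec-sheet values (2,560 shaders at a 1,733 MHz boost clock for the GTX 1080, 4,096 shaders at 1,050 MHz for the Fury X); those shader counts and the boost clock do not appear in this thread.

```python
def peak_fp32_tflops(shaders: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 TFLOPS, assuming one FMA (2 FLOPs) per shader per clock."""
    # MHz * 1e6 = Hz, and dividing by 1e12 gives TFLOPS, so the net divisor is 1e6.
    return shaders * 2 * clock_mhz / 1e6


# Assumed public specs (not from the thread): (shader count, clock in MHz).
CARDS = {
    "GTX 1080 (boost)": (2560, 1733),
    "Fury X": (4096, 1050),
}

for name, (shaders, clock) in CARDS.items():
    print(f"{name}: {peak_fp32_tflops(shaders, clock):.2f} TFLOPS")
```

Peak TFLOPS is a ceiling, not a benchmark result; how close a card gets to it depends on the workload and on how well the API keeps its shaders busy.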

Remember: Pascan’t async.

That’s why the 1080 is only beating the Fury X by a 13% margin, as you say.

I’m not so sure about that. In all my research, Pascal seems to support async. NVIDIA actually adopted some methods similar to those of AMD’s GCN, so while the implementation doesn’t seem to be quite as good as AMD’s, it is much closer than Maxwell’s.

It seems like NV’s Async is still software-based, though we will see.

It is difficult to say at the moment. I know that Pascal reduces the number of CUDA cores per SM to 64 (versus 128 on Maxwell), and NVIDIA also claims that Pascal supports compute preemption. If so, these changes are going in the right direction for a proper async implementation.

In GP100 they did indeed drop to 64, but that does not appear to be the case in GP104 (whose SMs have 128).

In terms of efficiency, this comparison is invalid because it pits 16nm against 28nm.

That’s like comparing a Radeon HD 7970 (28nm) with a Radeon HD 6990 (40nm).

You are missing the point. I was being ironic.

Nvidia produces a brand spanking new, massively OVERCLOCKED beast on the latest litho process, 16nm FinFET, and the BEST the GTX 1080 can do is outperform last year’s AMD front-runner, the 28nm Fury X running at only about 66% of the GTX 1080’s clock, by a measly 13%!!!!!

The GTX 1080 is broken running DX12. It should have been at least 30-40% faster on clock speed alone, never mind that it is 16nm FinFET.

How underwhelming.
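Taking the figures quoted in this thread at face value (GTX 1080 at 1.6 GHz, Fury X at 1,050 MHz, a 13% lead in the DX12 benchmark), the clock arithmetic can be sketched as follows. It compares clock speed only and deliberately ignores every other architectural difference between the two chips.

```python
# Figures as quoted in this thread (not independently verified).
GTX1080_CLOCK_MHZ = 1600
FURY_X_CLOCK_MHZ = 1050
BENCH_LEAD = 1.13  # GTX 1080 score / Fury X score in the quoted DX12 benchmark

# How much higher the GTX 1080 is clocked than the Fury X.
clock_ratio = GTX1080_CLOCK_MHZ / FURY_X_CLOCK_MHZ
# The Fury X clock expressed as a fraction of the GTX 1080 clock.
fury_share_of_clock = FURY_X_CLOCK_MHZ / GTX1080_CLOCK_MHZ
# Benchmark performance per MHz of the GTX 1080, relative to the Fury X.
per_clock_ratio = BENCH_LEAD / clock_ratio

print(f"GTX 1080 clock advantage: {clock_ratio - 1:.0%}")
print(f"Fury X runs at {fury_share_of_clock:.0%} of the GTX 1080 clock")
print(f"GTX 1080 benchmark performance per MHz vs Fury X: {per_clock_ratio:.2f}x")
```

On these numbers the GTX 1080 does roughly 0.74x the Fury X’s benchmark work per MHz. Clock speed is only part of the picture, though: assuming the public shader counts (4,096 for the Fury X vs. 2,560 for the GTX 1080), the Fury X has 1.6x as many shaders working on every clock.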

I know. But what would matter most is how they compare with both the 1080 and the Fury X clocked the same.

AMD will blow Nvidia out of the water with its 14nm FinFET Polaris chip.

Doubtful. Not the ones launching in the immediate future, anyway. At first I was disappointed to hear the early Polaris cards aren’t going to be fighting in the high-end, but the more I thought about it the more I realized this was actually a very interesting move.

After all, they’re targeting my favorite segment with Polaris 10: mainstream. I mean heck, I usually buy graphics cards in the $200-250 range, and the 1070 will likely start life in the $380-400 range. So if Polaris hits hard under $300, it may be within reach for me in the near future. Hopefully they’ll have a slightly cut-down (non-X) version that’s even cheaper; these tend to give the best value on a budget in the mid-range.

Polaris 11 is also interesting because it targets affordable game-capable laptops as well as entry-level discrete cards. If they keep power in check like the early teasers indicate, these will be great for upgrading OEM boxes or older machines for friends and family.

I love how everyone buys into the “GTX 1080 matters” mindset. No, it doesn’t. The real bomb is the 1070, because of its slightly lower performance at a hugely lower price point. Without the 1070 there would indeed be no competition between the two: Polaris for mainstream/budget users, the 1080 for enthusiasts until even bigger, badder cards launch.

But with the 1070’s high performance-to-price ratio, Polaris has a competitor. Maybe not toe-to-toe, given the 1070 is stronger and more expensive, but a lot of players will be lured into shelling out for the 1070’s extra power with the future in mind, given that its price point is not that far off the mid-range and, unlike the 1080, it offers very good value per dollar.

Unless Polaris delivers more than expected (be it in performance or by going way lower than $300), AMD is not off to a smooth start: it will have competition on the market from day one.

Way lower than $300? Why? The 1070 is $450; that’s not really mid-range, it pretty much is high end. And yes, I know the MSRP is $380, but I’m ready to bet you will not see a single custom 1070 under the $400 price point. Most will be $450 +/-10%.

Do you have a source, or are you just exercising your Wikipedia PhD? Either way, the OP is right that AMD will have to price these lower than $250 for them to sell, or it will become another disaster just like the Fury lineup was.

A source? Yes, I have one: HISTORY! Custom cards have always been more expensive than reference ones. That’s no longer the case, since Nvidia is asking a $100 and $70 premium for reference cards now (lol!), but it won’t be $380 either. You should be thankful if you see any 1070 at $400; most will be at or around $450!

No, I meant a source that says they will be priced at exactly $450. Only an idiot would think that non-reference cards would be cheaper. Geez.

This is pure speculation. There’s an MSRP for a reason. I’d imagine most cards will be at or near MSRP when the partners launch their cards.

The day that custom cards sell for less than a reference card is not upon us, friend. The 1070 will be $450+ and the 1080 will be $700+

The Founders Edition is not ‘reference’. Yes, NVIDIA does have a reference board which it and its partners will be using. That said, the FE itself is not just a plain old reference card. It is priced the way it is because NVIDIA wants to keep it on the market for the GPU’s entire life cycle, so pricing it at MSRP would mean competing with its own partners.

That, paired with the fact that the FE features premium materials and vapor-chamber cooling, means that yes, you will see “custom” designs that are cheaper than the FE.

I’ll believe it when I see it. Until then, history says the reference card released by the manufacturer tends to be on the bottom end of the pricing spectrum.

Yes, that is true. However, you’re acting like the pricing for the 1070 is a rumor. It is not. NVIDIA has already stated that the MSRP is $379. Of course, there will be cards that exceed that pricing, but most will be at or around that price (give or take $20).

In 2014, when the 980 and 970 launched, the 980 had an MSRP of $549. The cheapest I found it a few days later, when I picked one up, was $579. It is a suggested price; you usually can’t find anything at that price for a while. Again, we’ll see. I’m obviously not going to complain if 1080 cards hit the market for only $600. That would be incredible!

Polaris may not be a 1070 competitor. Personally, I hope it is, since I’d be in the market for it. However, AMD could be aiming at the large market potential in the mainstream/midrange/whatever you want to call it, which is what this article is all about. Having no answer to the 1070/1080 right now might disappoint enthusiasts, but having a suite of cards for the sub-1070 market that beats Nvidia’s price/performance is a good strategy.

A very good article, but there are two things I disagree with you on. First, if you sell 10 cheeseburgers at a $1 profit each and I sell 5 double cheeseburgers at a $2 profit each, we both make the same. There is a large market for $250 cards, but the profit margins are larger at the high end.

The second and much larger problem is that this strategy works great right up to the moment we read “Nvidia launches the 1060!” Even if AMD has the whole summer to sell cards in this segment, it will only change its market share by tenths of a percent. GlobalFoundries simply cannot make enough chips, even if everyone wanted a new 480.

Of course, the nightmare scenario is if Nvidia “Furys” the launch: a week before the Fury launched, they launched the 980 Ti. A 1060 announcement would really mess up AMD’s marketing plans. But I don’t think they will. Nvidia seems happy with a “we keep the margins, you can have the volume” arrangement. But sooner rather than later, the 1060 is coming.

True, but in this scenario, Polaris is going to be considerably cheaper to produce than the 1070. GP104 is roughly 35% larger than P10 in die size. This means P10 will get ~71 more dies onto a 300 mm wafer, and because it is smaller, its yields should also be higher.

If AMD sells P10 at $300, it could make the same profit margin as Nvidia selling the 1070 at $379.

There’s no reason why GloFo and Samsung together could not make enough chips.

The 1060 will probably come in July, in which case AMD will already have had a month or more to pump up its product, produce it like hotcakes, and sell it at the price it chooses. We still don’t know if the 1060 will use GP104, but yields would be pretty crap if it did.

To further elaborate:

Assumptions:

– TSMC (and Samsung) 300 mm FinFET wafers cost around $8,400 (an analyst estimate for TSMC; I’ll assume Samsung charges about the same).

– Polaris 10 has a die size of 232 mm^2.

– Yields (used later).

——————————————————————————————–

Polaris 10 can fit a maximum of 251 dies on a single wafer, and GP104 a maximum of 180.
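Die counts like these can be approximated with the widely used dies-per-wafer formula, DPW ≈ π(d/2)²/S − πd/√(2S), where d is the wafer diameter and S is the die area. A minimal sketch, assuming a 300 mm wafer with no edge exclusion and no scribe lines; the 314 mm² GP104 die size is an assumption taken from NVIDIA’s published specs and does not appear in this thread. Because it ignores edge exclusion and scribe lines, this approximation gives somewhat higher counts (about 260 and 187) than the 251 and 180 quoted above, which likely come from a calculator that accounts for those losses.

```python
import math


def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Approximate gross dies per wafer (ignores edge exclusion and scribe lines)."""
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    # Second term estimates the partial dies lost around the wafer's circumference.
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(wafer_area / die_area_mm2 - edge_loss)


p10 = dies_per_wafer(232)    # Polaris 10, die size from the comment above
gp104 = dies_per_wafer(314)  # GP104, assumed 314 mm^2
print(p10, gp104, p10 - gp104)
```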

I will be optimistic about yields simply because information is scarce; apparently a ~100 mm² mobile chip was yielding up to 85% for TSMC, but that’s absolutely tiny in comparison to these.

Anyway, I assume P10 will yield 10 percentage points better than GP104, given that it is so much smaller (and will have more SKUs: 2560, 2304, and 2048 variants all seem likely, and it could go down to ~1664 for a mobile chip or something).

If GP104 yields at 60%, 108 chips will be salvageable, each costing just under $78. P10, if it yields at 70%, can produce 175 good chips, costing $48 each.
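The cost-per-good-die arithmetic in this comment can be reproduced with a short sketch. It uses only the commenter’s own assumptions ($8,400 per wafer, 251 and 180 gross dies, 70% and 60% yields) and rounds good dies down to whole chips. It ignores packaging, testing, and the salvage of partially defective dies into cut-down SKUs.

```python
WAFER_COST_USD = 8400  # commenter's assumed price of one 300 mm FinFET wafer


def good_dies(gross_dies: int, yield_pct: int) -> int:
    # Integer math, so a fractional good die is rounded down to whole chips.
    return gross_dies * yield_pct // 100


def cost_per_good_die(gross_dies: int, yield_pct: int,
                      wafer_cost: float = WAFER_COST_USD) -> float:
    # The full wafer cost is spread across only the dies that work.
    return wafer_cost / good_dies(gross_dies, yield_pct)


for name, gross, y in [("GP104", 180, 60), ("Polaris 10", 251, 70)]:
    print(f"{name}: {good_dies(gross, y)} good dies, "
          f"${cost_per_good_die(gross, y):.2f} each")
```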

The majority of GP104 sales will go to the 1070. P10 is harder to call, but whichever variant is priced closest to the 970 is most likely going to be the best seller.

Actually, we don’t disagree. If you look closely, you’ll notice that I actually stated that high-end cards carry with them higher margins. That’s never something I tried to refute.

Also, yes, of course once NVIDIA launches the 1060, it’ll be a different story. But that’s kind of the point: AMD wants to have at least a quarter without competing with the likes of the 1060. That would allow it to take back significant market share because of the sheer volume of the mainstream segments.

Thanks for reading and for the constructive comment!

What a bold claim. As the article notes, since Kepler Nvidia has pursued the upper mid-range and enthusiast markets first (if we ignore the 750 Ti). Yet since then, Nvidia’s market share has only grown.

You may say that AMD now has open season on the mid-range and entry level. But it doesn’t. It will be competing with the many gamers now dumping their 28nm cards, including the 960, 970, 980 and 980 Ti, on the second-hand market, as one can already observe in the online classifieds. Polaris will also compete against AMD’s own Nano and Fury cards. And what about the new Fury Duo at 1500 USD? A complete waste of time and R&D investment.

I don’t see Nvidia users, especially in the mid-range and entry level, moving to AMD, because of AMD’s weaker software ecosystem. Nvidia simply offers a lot more as a whole. I wouldn’t be surprised if a lot of 750 Ti owners are now snapping up the 970s being sold second-hand, as used prices for these cards have plummeted.

You have a valid point. A lot of Nvidia users on Kepler might view the used 970 and 980 cards as a chance to move up to an Nvidia architecture (Maxwell) that Nvidia still provides legitimate support for.

However, how long before Nvidia starts gimping Maxwell cards to get these guys on Pascal?

Something all Nvidia loyalists need to keep in the back of their minds.

Nvidia gimping cards is a myth.

People seem to think that adding optional new game features that take advantage of the capabilities of newer hardware is “gimping.” It sure would be if the new game features weren’t optional, but they are and you can turn them off to keep chugging away like you always have.

Personally, I will be keeping my overclocked 980 for a while because it has no problems maxing games like Battlefront at 1080p 120Hz.

Very speculative. I wouldn’t underestimate Nvidia quite so casually. Thinking they are going to passively cede markets is a bit optimistic. BTW, the 970 has been a huge seller for Nvidia, and that’s still a $300-plus part.

If your R&D team gives you lemons… make mainstream lemonade.

I hope AMD didn’t forget the other gamers who buy second-hand GPUs. I’m leaning towards Polaris 10 and what it could offer, given that I have a GTX 660, so this upgrade, whether it’s Nvidia or AMD, is pretty big for me. Prices on used GTX 970s and 980s will drop in our country once the 1070 and 1080 hit the shelves. I’m a mainstream gamer looking for a $200-250 GPU, so I’m really hoping these P10 chips are on par with last generation’s 970 and 980 at a lower price and wattage.

Seems most people are looking at 4k/2k gaming and maybe VR when next upgrading GPU.

Nvidia have not really hit the ball out of the park with the GTX 1080; it’s more of a successful bunt.

If the mainstream Polaris cards get near Fury X performance for half the price, they will mop up the rest of the market that wants to upgrade, play the latest games at the highest settings with decent FPS, and get acceptable VR performance without strangling their wallets.

Vega could very well have the 1080 fall on its sword but we will just have to wait and see.

Either way, I can’t justify the price of a GTX 1080 upgrade, especially with the premium-priced Founders Edition gouging enthusiasts. Reminds me of another new tech launch recently, hmmm.

I am willing to spend £350+, but I want truly groundbreaking performance improvements for that: true 4K at 60fps, noticeable DX12 improvements, and truly future-proof VR performance.

Otherwise I will just get the best bang per buck for a card sitting in the £250 range.

“Last week, NVIDIA unveiled their next-generation flagship GPU the GTX 1080, along with the slightly less powerful GTX 1070. With both of these new GPUs the company has made some pretty outrageous — and if at all true, very impressive — claims in terms of performance.”

There you have it! Claims! The 1080 is an awesome product, to be sure. However, the independent benchmarks have disproven the BIG claim Nvidia puked up about 2 times the performance of the Titan X. It is just barely 2 times the performance of the GTX 970 and almost 2 times the performance of a GTX 980, but falls woefully short (as expected) of 2 times the Titan X. Don’t say it if it isn’t true. Nvidia lied, plain and simple. I have seen the quotes all over the tech community, watched the live stream until I couldn’t take it anymore, and watched many of the video after-parties hailing Nvidia, King of the Titans… er, GPUdom. Can we please dispense with the “new king” hyperbole? I don’t favor Nvidia over AMD/Radeon or vice versa. I favor competition, and while I expect some pomp and circumstance, I don’t expect these people to outright lie about their products. It makes me want to dust off my Cyrix-powered, Voodoo-enhanced PC and play emulated 8-bit games.

On the lighter side, thank you. The article was a good read.

Hi, thanks for your comment.

I think I must point out that NVIDIA only stated that the GTX 1080 would perform 2x better than a Titan X in VR applications. This is more or less true, as it uses a new single-pass rendering technology called “Simultaneous Multi-Projection”, which essentially allows it to render the game once and display it to both eyes with the proper perspectives.

It is still disingenuous as it could easily be misconstrued, as it was.

Yes, YOU did make that point. It was not my intention to make you the sacrificial lamb of the tech media. I applaud your observations, as very few others took it upon themselves to look past the narrative Nvidia wanted pushed upon the masses. This is the point I attempted to make. Thank you for being patient with my commentary.