AMD’s Zen-based server platform, EPYC (codename Naples), has been in the works for quite some time, and it finally appears ready for prime time. At its recent EPYC launch event in Austin, Texas, AMD fully detailed its new server platform, which is designed to challenge Intel’s dominance in the server market.

AMD EPYC 7000 Series Processor

Launching today are no fewer than twelve CPU SKUs in the AMD EPYC 7000-Series processor lineup. The new CPUs feature up to 32 cores/64 threads, 8-channel DDR4 memory support (up to 2TB of memory per CPU), 128 PCIe lanes, a dedicated security subsystem, and an integrated chipset.

AMD’s EPYC 7000-Series processors are divided into two platforms: 1P (single socket) and 2P (dual socket).

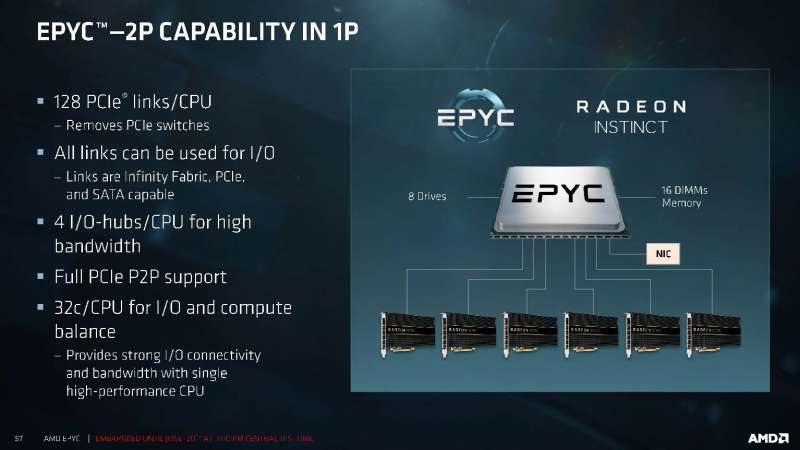

AMD’s 1P CPUs are fairly straightforward. Each CPU features a massive 128 PCIe lanes, which can be used for Infinity Fabric, PCIe devices such as GPUs, and more. The flagship 1P CPU, the EPYC 7551P, is a 32-core/64-thread processor with 8-channel DDR4 memory support (up to 2TB). The EPYC 7551P is expected to be priced at just over $2,000, which will pose a major challenge to Intel, which typically prices its 10-core/20-thread Xeon E5 at around $2,000.

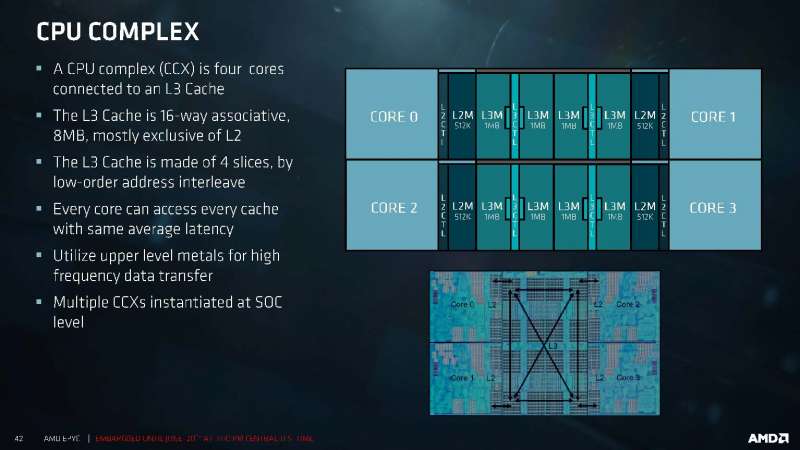

AMD markets its 1P EPYC CPUs as offering 2P capability in a 1P package. This is because each EPYC processor is actually made up of four 8-core dies, each containing two CCXs. The dies are packaged together as an MCM (Multi-Chip Module) and interconnected by Infinity Fabric. This allows AMD to produce a 32-core chip without the difficulty of manufacturing a single 32-core die.
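The die arithmetic above can be checked with a small sketch. The four dies per package and two CCXs per die come from the paragraph above; the four cores per CCX and two SMT threads per core come from the Zen architecture details later in this article. The helper name `epyc_topology` is hypothetical, and it assumes fully enabled top-bin parts.

```python
# Hypothetical helper: derive core/thread counts for an EPYC (Naples) system
# from its multi-chip layout, assuming every core on every die is enabled.
DIES_PER_PACKAGE = 4    # four dies per EPYC processor (MCM)
CCX_PER_DIE = 2         # two CPU complexes per die
CORES_PER_CCX = 4       # four Zen cores per CCX
THREADS_PER_CORE = 2    # SMT: two threads per core


def epyc_topology(sockets: int = 1) -> dict:
    """Return core and thread totals for a 1P or 2P system."""
    cores_per_die = CCX_PER_DIE * CORES_PER_CCX            # 8
    cores_per_socket = DIES_PER_PACKAGE * cores_per_die    # 32
    cores = sockets * cores_per_socket
    return {"cores_per_die": cores_per_die, "cores": cores,
            "threads": cores * THREADS_PER_CORE}


if __name__ == "__main__":
    print(epyc_topology(1))  # {'cores_per_die': 8, 'cores': 32, 'threads': 64}
    print(epyc_topology(2))  # {'cores_per_die': 8, 'cores': 64, 'threads': 128}
```

Lower-bin SKUs such as the 24-core and 16-core parts presumably disable cores per CCX, which this sketch does not model.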

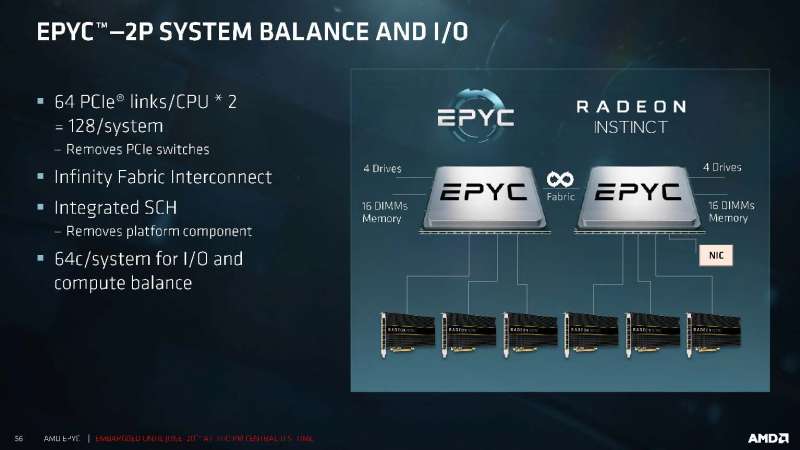

Moving on, the main differences in the 2P configuration are that the maximum core count increases to 64 and maximum memory increases to 4TB. PCIe lanes, however, are still capped at 128.

This is because AMD uses the PCIe lanes built into the CPU, rather than a separate dedicated interconnect, to link the two sockets. In a 2P system, AMD reserves half (64) of the 128 PCIe lanes on each CPU to run in Infinity Fabric mode for processor-to-processor communication. Since each CPU still retains 64 PCIe lanes, the system still provides a total of 128 PCIe lanes for other devices such as graphics cards and storage.
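The lane budget described above reduces to simple arithmetic. The sketch below assumes, as the text states, 128 lanes per socket with 64 of them switched to Infinity Fabric mode in a 2P system; the function name `usable_pcie_lanes` is hypothetical.

```python
# Sketch of the PCIe lane budget for 1P and 2P EPYC systems.
# Assumption (from the text): each socket exposes 128 lanes, and in a 2P system
# half of them are repurposed as the socket-to-socket Infinity Fabric link.
LANES_PER_SOCKET = 128


def usable_pcie_lanes(sockets: int) -> int:
    """Return the PCIe lanes left for devices such as GPUs and storage."""
    if sockets == 1:
        return LANES_PER_SOCKET            # all 128 lanes available for I/O
    if sockets == 2:
        reserved = LANES_PER_SOCKET // 2   # 64 lanes per socket carry Infinity Fabric
        return sockets * (LANES_PER_SOCKET - reserved)
    raise ValueError("EPYC 7000-series supports 1P or 2P configurations only")


print(usable_pcie_lanes(1))  # 128
print(usable_pcie_lanes(2))  # 128, i.e. 2 sockets x 64 remaining lanes
```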

Here’s a full list of the processors in the EPYC 7000-series lineup.

AMD EPYC Server Processor Lineup

All models support 8-channel DDR4-2666 memory and 128 PCIe 3.0 lanes.

| Model | Socket | Cores / Threads | Base Freq | Max Boost Freq | TDP | Price |
|---|---|---|---|---|---|---|
| EPYC 7601 | 2P | 32 / 64 | 2.2 GHz | 3.2 GHz | 180W | >$4,000 |
| EPYC 7551 | 2P | 32 / 64 | 2.0 GHz | 3.0 GHz | 180W | >$3,200 |
| EPYC 7501 | 2P | 32 / 64 | 2.0 GHz | 3.0 GHz | 155W/170W | ? |
| EPYC 7451 | 2P | 24 / 48 | 2.3 GHz | 3.2 GHz | 180W | >$2,400 |
| EPYC 7401 | 2P | 24 / 48 | 2.0 GHz | 3.0 GHz | 155W/170W | >$1,700 |
| EPYC 7351 | 2P | 16 / 32 | 2.4 GHz | 2.9 GHz | 155W/170W | >$1,100 |
| EPYC 7301 | 2P | 16 / 32 | 2.2 GHz | 2.7 GHz | 155W/170W | >$800 |
| EPYC 7281 | 2P | 16 / 32 | 2.1 GHz | 2.7 GHz | 155W/170W | >$600 |
| EPYC 7251 | 2P | 8 / 16 | 2.1 GHz | 2.9 GHz | 120W | >$400 |
| EPYC 7551P | 1P | 32 / 64 | 2.0 GHz | 3.0 GHz | 180W | >$2,000 |
| EPYC 7401P | 1P | 24 / 48 | 2.0 GHz | 3.0 GHz | 155W/170W | >$1,000 |
| EPYC 7351P | 1P | 16 / 32 | 2.4 GHz | 2.9 GHz | 155W/170W | >$700 |

AMD will have an entire portfolio of CPUs, from the 8-core EPYC 7251 to the 32-core EPYC 7601, with prices ranging from just over $400 to over $4,000, respectively.

EPYC Zen Architecture

AMD EPYC processors are powered by AMD’s new Zen microarchitecture, which we’re all relatively familiar with by now.

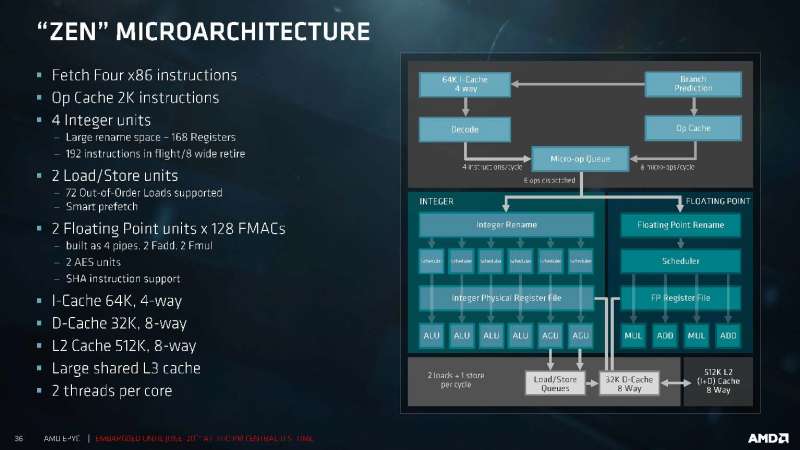

Zen cores have a front end that fetches and decodes instructions and converts them into micro-ops to be sent to the execution engine. At the front end, four decoders can process up to four x86 instructions per cycle. Once decoded, the micro-ops are sent to the micro-op queue. Also new for Zen is a micro-op cache, which stores previously decoded micro-ops and sends them directly to the micro-op queue, bypassing the decoders. From the queue, the micro-ops are sent to the execution engine. Unlike newer Intel architectures, which use a unified scheduler, Zen cores continue to use separate pipelines for integer and floating-point operations.
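To illustrate why a micro-op cache helps, here is a toy model of the front-end flow described above: instructions that hit in the micro-op cache skip the decoders and go straight to the micro-op queue. This is not AMD's actual design; the class name, the address-keyed cache, and the one-micro-op-per-instruction "decoder" are hypothetical simplifications, and capacity limits and timing are not modeled.

```python
# Toy model (not AMD's design) of a front end with a micro-op cache:
# decoded micro-ops are remembered by instruction address, so a hot loop
# only pays the decode cost on its first pass.
from collections import deque


class ToyFrontEnd:
    def __init__(self):
        self.op_cache = {}        # hypothetical: address -> list of micro-ops
        self.uop_queue = deque()  # micro-ops waiting for the execution engine
        self.decoded = 0          # instructions that went through the decoders
        self.op_cache_hits = 0    # instructions served from the micro-op cache

    def decode(self, insn):
        # Stand-in for real x86 decoding: one micro-op per instruction.
        self.decoded += 1
        return [f"uop({insn})"]

    def fetch(self, block):
        """Process a block of (address, instruction) pairs."""
        for addr, insn in block:
            uops = self.op_cache.get(addr)
            if uops is not None:
                self.op_cache_hits += 1       # hit: bypass the decoders
            else:
                uops = self.decode(insn)      # miss: decode, then remember
                self.op_cache[addr] = uops
            self.uop_queue.extend(uops)


fe = ToyFrontEnd()
loop_body = [(0x10, "add"), (0x14, "mul"), (0x18, "cmp"), (0x1C, "jne")]
for _ in range(3):                   # run the same loop body three times
    fe.fetch(loop_body)
print(fe.decoded, fe.op_cache_hits)  # 4 8
```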

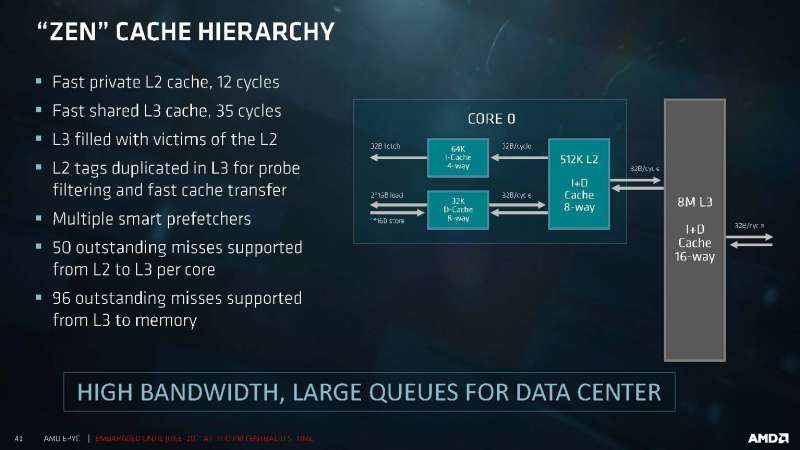

As for the memory subsystem, each core features a 512KB L2 cache, a 32KB D-cache (data cache), and a 64KB I-cache (instruction cache).

Each CCX (CPU Complex) contains four Zen cores, which share a large 8MB L3 cache (2MB per core).
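Scaling these per-core figures up to a full package is a useful sanity check. The sketch assumes a fully enabled 32-core EPYC with 512KB of L2 per core and 2MB of L3 per core pooled into a shared L3 per four-core CCX, with eight CCXs per package (four dies with two CCXs each).

```python
# Back-of-the-envelope cache totals for a fully enabled 32-core EPYC package.
# Assumptions: 512KB L2 per core; 2MB of L3 per core, shared per four-core CCX;
# eight CCXs per package (4 dies x 2 CCXs).
KB = 1
MB = 1024 * KB

L2_PER_CORE = 512 * KB
L3_PER_CORE = 2 * MB
CORES_PER_CCX = 4
CCX_PER_PACKAGE = 8

cores = CORES_PER_CCX * CCX_PER_PACKAGE     # 32 cores
l2_total = cores * L2_PER_CORE              # private L2 summed over all cores
l3_per_ccx = CORES_PER_CCX * L3_PER_CORE    # L3 shared within one CCX
l3_total = CCX_PER_PACKAGE * l3_per_ccx     # L3 summed over all CCXs

print(f"L2: {l2_total // MB}MB, L3: {l3_total // MB}MB ({l3_per_ccx // MB}MB per CCX)")
# L2: 16MB, L3: 64MB (8MB per CCX)
```

Note that a core can only allocate into its own CCX's L3, so the 64MB total is an aggregate, not one unified cache.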

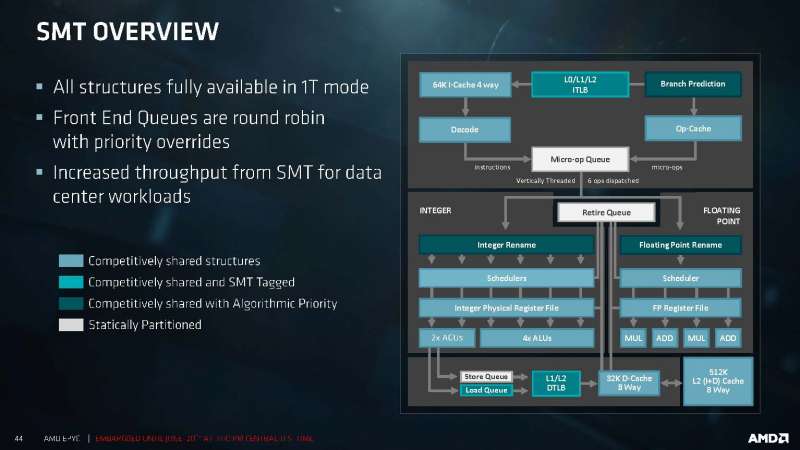

Zen cores also feature Simultaneous Multi-Threading (SMT), which allows each core to process two threads.

AMD also significantly updated the instruction set in the Zen architecture, adding ADX, RDSEED, SMAP, SHA1/SHA256, CLFLUSHOPT, XSAVEC, and MWAIT C1, along with the AMD-exclusive CLZERO instruction and PTE Coalescing. AMD is also bringing new virtualization and security features such as data poisoning, AVIC, nested virtualization, SME, and SEV. The virtualization features will be particularly beneficial, as AMD claims a 50% reduction in latency in typical virtualization workloads compared to its last-generation Bulldozer-based CPUs.
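On a Linux host, one way to see which of these ISA additions a CPU reports is to inspect the `flags` line of `/proc/cpuinfo`. The sketch below assumes the Linux kernel's flag spellings (for example `sha_ni` for the SHA1/SHA256 extensions); flag names and availability can vary by kernel version, and the helper names are hypothetical.

```python
# Hypothetical helper: check which Zen ISA additions a Linux host reports.
# Assumption: flag names follow the Linux kernel's /proc/cpuinfo spellings,
# e.g. "sha_ni" for the SHA1/SHA256 extensions.
ZEN_FLAGS = ["adx", "rdseed", "smap", "sha_ni", "clflushopt", "xsavec", "clzero"]


def parse_flags(cpuinfo_text: str) -> set:
    """Return the flag set from the first 'flags' line of /proc/cpuinfo."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            return set(line.split(":", 1)[1].split())
    return set()


def missing_zen_flags(cpuinfo_text: str) -> list:
    """List the flags from ZEN_FLAGS that the CPU does not report."""
    flags = parse_flags(cpuinfo_text)
    return [f for f in ZEN_FLAGS if f not in flags]


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as fh:
            print(missing_zen_flags(fh.read()) or "all listed flags present")
    except FileNotFoundError:
        print("/proc/cpuinfo not available (not a Linux host)")
```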

Infinity Fabric

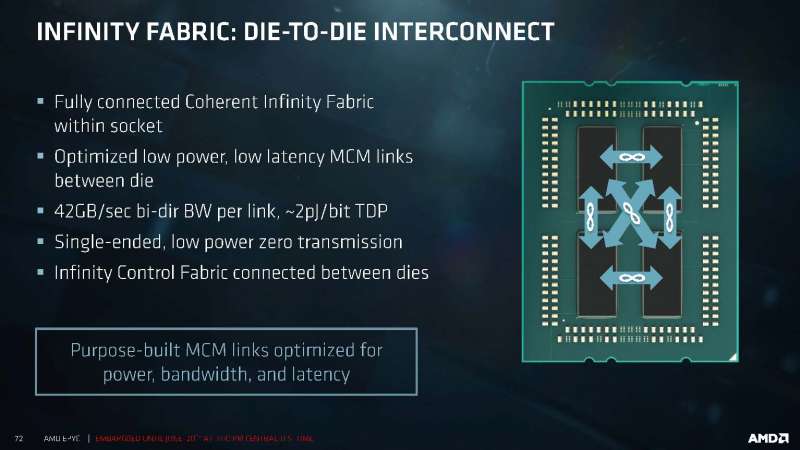

As mentioned previously, AMD’s EPYC processors aren’t single-die monsters but rather contain four dies interconnected by AMD’s new high-performance Infinity Fabric. AMD chose this path because, while an interconnect may not be as fast as a single 32-core die, it’s a lot easier to manufacture multiple 8-core dies than a single 32-core die. With improved yields, AMD is able to lower costs and substantially reduce manufacturing difficulty.
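The yield argument can be made concrete with the simple Poisson defect model, Y = exp(-D × A), where D is defect density and A is die area. This is a textbook approximation swapped in for illustration, not AMD's own analysis, and the defect density and die areas below are hypothetical round numbers rather than real foundry data.

```python
# Illustrative yield comparison using the Poisson defect model Y = exp(-D * A).
# All numbers are hypothetical round figures chosen only to show the trend.
import math

DEFECTS_PER_CM2 = 0.2              # hypothetical defect density (defects / cm^2)
SMALL_DIE_CM2 = 2.0                # hypothetical area of one 8-core die
BIG_DIE_CM2 = 4 * SMALL_DIE_CM2    # monolithic 32-core die (ignores shared-I/O savings)


def poisson_yield(area_cm2: float, d0: float = DEFECTS_PER_CM2) -> float:
    """Fraction of dies expected to have zero defects."""
    return math.exp(-d0 * area_cm2)


small = poisson_yield(SMALL_DIE_CM2)   # ~0.670
big = poisson_yield(BIG_DIE_CM2)       # ~0.202

# Wafer area consumed per good 32-core product: the MCM needs four good small
# dies (each tested before packaging); the monolithic part needs one good big die.
mcm_area = 4 * SMALL_DIE_CM2 / small
mono_area = BIG_DIE_CM2 / big
print(f"small-die yield {small:.1%}, big-die yield {big:.1%}")
print(f"area per good 32-core part: MCM {mcm_area:.1f} cm^2, monolithic {mono_area:.1f} cm^2")
```

The model ignores salvaging partially defective dies as lower-core-count SKUs, which in practice improves the economics of both approaches.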

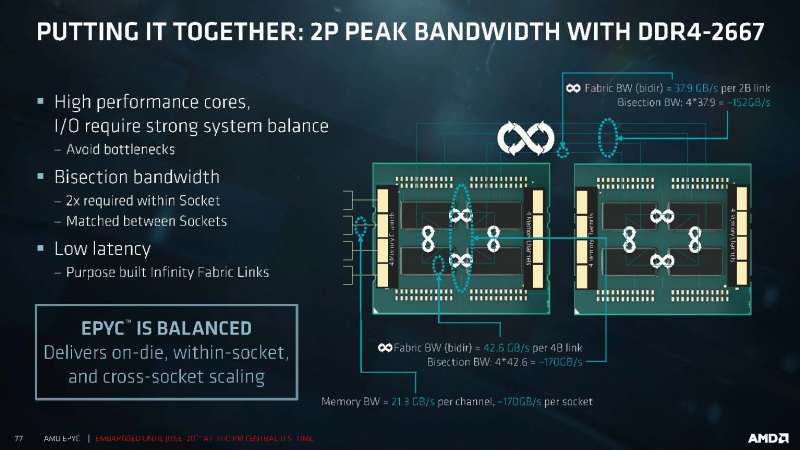

In EPYC, each of the four dies is connected directly to each of the other three dies, giving every die three Infinity Fabric links to minimize latency. Each link provides 42GB/s of bi-directional bandwidth, for a total of 126GB/s across the three links. Power consumption is rated at just 2 picojoules per bit transferred.
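Combining the bandwidth and energy figures above gives a rough sense of the interconnect's power cost. This worked example assumes the 2 pJ/bit rating applies uniformly and that all three of a die's links run at their full 42GB/s simultaneously, which is a best-case upper bound rather than a measured number.

```python
# Worked numbers for the on-package Infinity Fabric figures.
# Assumption: 2 pJ/bit applies uniformly and all three links are saturated.
LINKS_PER_DIE = 3
GBPS_PER_LINK = 42    # GB/s, bi-directional
PJ_PER_BIT = 2        # picojoules per bit transferred

die_bandwidth_gbs = LINKS_PER_DIE * GBPS_PER_LINK    # 126 GB/s
bits_per_second = die_bandwidth_gbs * 1e9 * 8        # bytes/s -> bits/s
watts = bits_per_second * PJ_PER_BIT * 1e-12         # joules per second

print(f"{die_bandwidth_gbs} GB/s per die, about {watts:.1f} W at full load")
```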

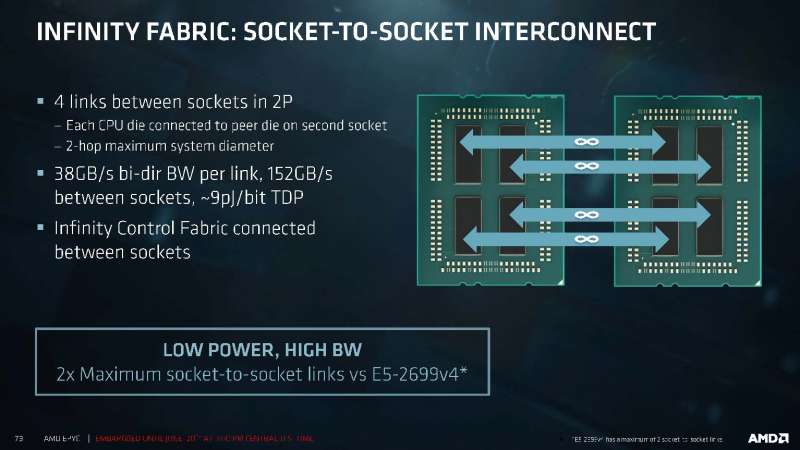

In a 2P system, four Infinity Fabric links connect the two sockets. To minimize latency, AMD ensures that any data traveling through the system requires a maximum of two hops to get from point A to point B. Each link provides up to 38GB/s of bi-directional bandwidth, for up to 152GB/s of total bandwidth between sockets. Power consumption is rated at just 9 picojoules per bit transferred.
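The two-hop claim can be checked by modeling a 2P system as a graph of eight dies and running a breadth-first search. The wiring is an assumption consistent with the text: within a socket every die links directly to the other three, and each die has one socket-to-socket link to the die in the same position on the other socket, giving four inter-socket links.

```python
# Sketch: model a 2P EPYC system as eight dies and verify the "at most two
# hops" claim with breadth-first search. Assumed wiring: full mesh within each
# socket, plus one link from die d on socket 0 to die d on socket 1.
from collections import deque
from itertools import combinations

DIES = [(s, d) for s in range(2) for d in range(4)]   # (socket, die)

links = set()
for s in range(2):
    for a, b in combinations(range(4), 2):
        links.add(((s, a), (s, b)))                   # on-package links
for d in range(4):
    links.add(((0, d), (1, d)))                       # inter-socket links

neighbors = {die: set() for die in DIES}
for a, b in links:
    neighbors[a].add(b)
    neighbors[b].add(a)


def hops_from(src):
    """Breadth-first search returning the hop count from src to every die."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        cur = queue.popleft()
        for nxt in neighbors[cur]:
            if nxt not in dist:
                dist[nxt] = dist[cur] + 1
                queue.append(nxt)
    return dist


max_hops = max(max(hops_from(src).values()) for src in DIES)
inter_socket_links = sum(1 for a, b in links if a[0] != b[0])
print(max_hops, inter_socket_links, inter_socket_links * 38)  # 2 4 152
```

For example, die 0 on socket 0 reaches die 1 on socket 1 via either die 1 on socket 0 or die 0 on socket 1, which is two hops either way.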

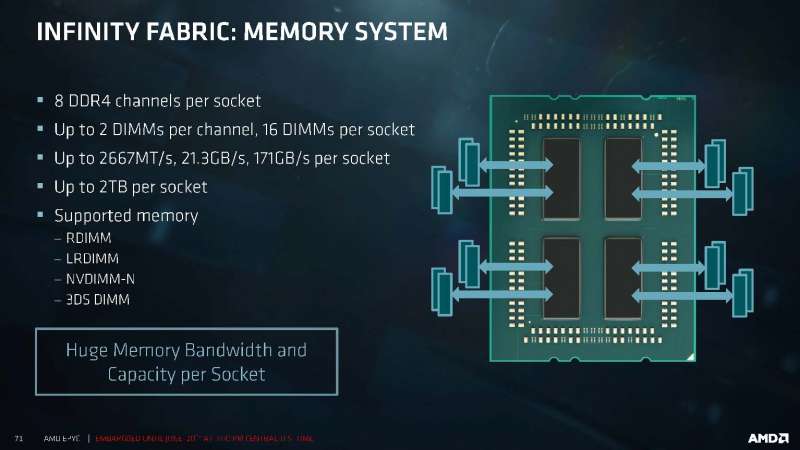

The entire lineup of EPYC processors supports up to 2TB of DDR4 per socket. EPYC also supports a wide range of memory types, including RDIMM, LRDIMM, NVDIMM-N, and 3DS DIMM. Using DDR4-2667, EPYC can achieve a massive 171GB/s of memory bandwidth per socket (21.3GB/s per channel).
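The bandwidth figures follow from the transfer rate and channel width. This worked example assumes DDR4-2667 performs about 2,666.67 million transfers per second over a 64-bit (8-byte) channel; the 2TB capacity check additionally assumes 2 DIMMs per channel populated with 128GB DIMMs, which is an illustrative configuration rather than a figure from the article.

```python
# Worked check of the per-socket memory figures.
# Assumptions: ~2,666.67 MT/s on an 8-byte channel; 2 DIMMs/channel x 128GB.
MT_PER_S = 2666.67e6
BYTES_PER_TRANSFER = 8
CHANNELS = 8

per_channel_gbs = MT_PER_S * BYTES_PER_TRANSFER / 1e9   # ~21.3 GB/s
per_socket_gbs = per_channel_gbs * CHANNELS             # ~170.7 GB/s, quoted as 171

DIMMS_PER_CHANNEL = 2
DIMM_GB = 128
capacity_tb = CHANNELS * DIMMS_PER_CHANNEL * DIMM_GB / 1024   # 2.0 TB

print(f"{per_channel_gbs:.1f} GB/s per channel, "
      f"{per_socket_gbs:.0f} GB/s per socket, {capacity_tb:.0f} TB")
```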

Here’s an overview of total bandwidth offered by each of the links.

Platform and Subsystems

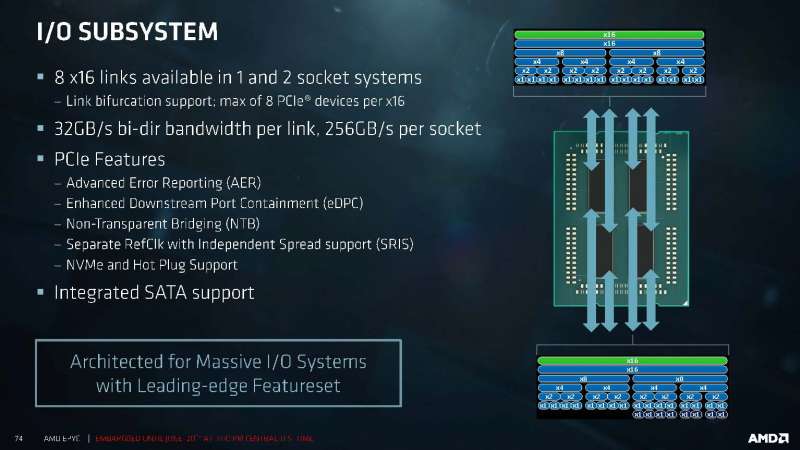

With four dies on each EPYC processor, each providing two x16 PCIe links, each socket has eight x16 links for a total of 128 PCIe lanes. At 32GB/s of bi-directional bandwidth per x16 link, this provides a total of 256GB/s of bandwidth per socket.
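The 32GB/s and 256GB/s figures are rounded. This worked example assumes standard PCIe 3.0 signaling (8 GT/s per lane with 128b/130b encoding) and counts both directions, which shows where the numbers come from and how much the encoding overhead trims them.

```python
# Where "32GB/s per x16 link" comes from, assuming PCIe 3.0 signaling:
# 8 GT/s per lane, 128b/130b encoding, bandwidth counted in both directions.
GT_PER_S = 8e9
ENCODING = 128 / 130
LANES_PER_LINK = 16
LINKS_PER_SOCKET = 8    # four dies x two x16 links each

one_way_gbs = GT_PER_S * ENCODING * LANES_PER_LINK / 8 / 1e9   # ~15.75 GB/s
bidir_gbs = 2 * one_way_gbs                                    # ~31.5, rounded to 32
socket_gbs = LINKS_PER_SOCKET * bidir_gbs                      # ~252, rounded to 256

print(f"x16: {bidir_gbs:.1f} GB/s bi-directional; socket: {socket_gbs:.0f} GB/s")
```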

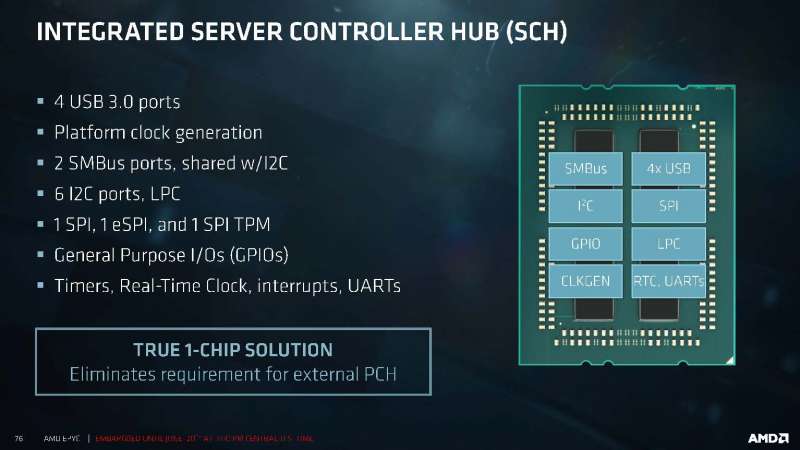

The controller hub is also integrated into the processor, removing the need for an external controller hub, traditionally known as the PCH (Platform Controller Hub).

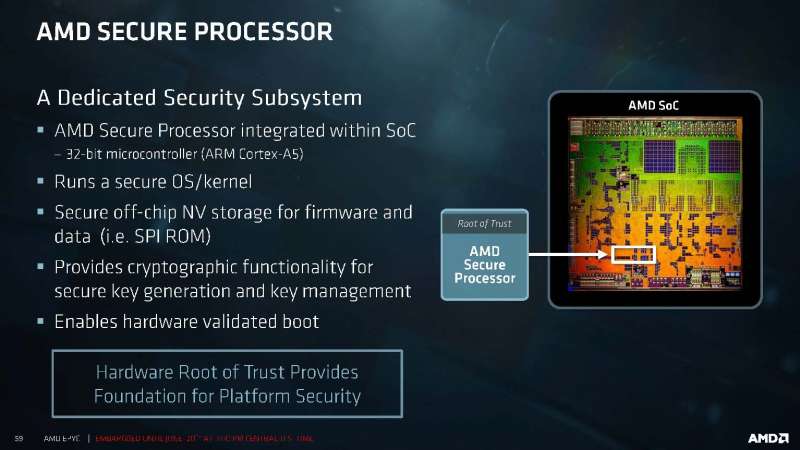

Secure Processor and Memory Encryption

Security has recently become a major concern, which has prompted AMD to introduce two new hardware-based security technologies with EPYC.

First is AMD’s Secure Processor technology. The Secure Processor is a separate ARM Cortex-A5-based processor embedded in the SoC that handles secure key generation and key management and enables hardware-validated boot.

Second are Secure Memory Encryption (SME) and Secure Encrypted Virtualization (SEV). SME uses a single key to encrypt system memory, protecting against physical memory attacks. This is beneficial for those who want to ensure that the server can’t be tampered with from the outside. SEV, on the other hand, encrypts each VM (or group of VMs) with its own key. This isolates the VMs from the hypervisor and prevents the hypervisor from accessing encrypted VM memory. This is beneficial in shared server environments, where it helps ensure security between VMs as well as between the hypervisor and the VMs.
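The key-isolation idea behind SEV can be illustrated with a toy model: memory is encrypted with the key of whichever context wrote it, so a different context (such as the hypervisor) reading the same address sees only ciphertext. This is a conceptual sketch only. A SHA-256-derived keystream XOR stands in for the hardware AES engine and is not secure, and the class and method names are hypothetical. SME corresponds to the simpler case where every context shares a single key.

```python
# Toy illustration of SEV-style key isolation. NOT real encryption: a SHA-256
# keystream XOR stands in for the memory controller's hardware AES engine.
import hashlib
import secrets


def keystream(key: bytes, addr: int, length: int) -> bytes:
    """Derive a per-address pad from the key (illustrative only)."""
    out = b""
    counter = 0
    while len(out) < length:
        block = key + addr.to_bytes(8, "little") + counter.to_bytes(4, "little")
        out += hashlib.sha256(block).digest()
        counter += 1
    return out[:length]


class ToyMemoryController:
    def __init__(self):
        self.keys = {}   # hypothetical: context id -> key held in hardware
        self.dram = {}   # address -> ciphertext as it sits in DRAM

    def key_for(self, ctx: str) -> bytes:
        # Each context (VM or hypervisor) gets its own random key on first use.
        return self.keys.setdefault(ctx, secrets.token_bytes(16))

    def write(self, ctx: str, addr: int, data: bytes) -> None:
        pad = keystream(self.key_for(ctx), addr, len(data))
        self.dram[addr] = bytes(a ^ b for a, b in zip(data, pad))

    def read(self, ctx: str, addr: int) -> bytes:
        stored = self.dram[addr]
        pad = keystream(self.key_for(ctx), addr, len(stored))
        return bytes(a ^ b for a, b in zip(stored, pad))


mc = ToyMemoryController()
mc.write("vm1", 0x1000, b"guest secret")
print(mc.read("vm1", 0x1000))         # b'guest secret'
print(mc.read("hypervisor", 0x1000))  # unintelligible bytes under a different key
```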

Performance

Ultimately, what does all this engineering boil down to? Better performance at a lower price point than the competition.

According to AMD, EPYC beats Intel Xeon on both price and performance by significant margins. In AMD’s internal benchmarks, the EPYC 7601 beats the Intel Broadwell-based Xeon E5-2699A by up to 47% in integer performance and 75% in floating-point performance, and it offers 2.5x the memory bandwidth.

But it’s not just the EPYC 7601: AMD claims all of its new EPYC chips beat their Intel counterparts by 23% to 70%.

EPYC Ecosystem

Supporting AMD’s EPYC platform are numerous enterprise software and system vendors, including everyone from DellEMC to HP Enterprise to Samsung.

Final Thoughts

Overall, AMD’s showing is nothing short of EPYC. But while AMD appears ready to begin fulfilling orders, it’s difficult to say whether it will find success in the Intel-dominated marketplace. Business and enterprise customers are notoriously conservative when making purchasing decisions, and AMD may find it difficult to persuade customers to move from a tried-and-true platform to a relatively new, albeit significantly cheaper, one. While we are hopeful, as they say in the IT world, “No one ever got fired for buying Intel”.