[section label=”Introduction”]

ZOTAC AMPs it up!

When NVIDIA first announced the Pascal-based GeForce GTX 1080 and GTX 1070 graphics cards back in May, it was very clear that the company had a pair of winners on its hands. Fast forward four months to today, and NVIDIA is still commanding the high-end GPU market, with no competition expected from rival AMD until early 2017. With that in mind, anyone in the market for a high-end graphics card really only has two options: the GeForce GTX 1080 or the GeForce GTX 1070.

Today, we’re taking a look at the latter, or more specifically, the ZOTAC GeForce GTX 1070 AMP! ZOTAC has been producing GeForce graphics cards for nearly a decade and has recently been making quite a name for itself in the enthusiast and overclocking communities with its AMP! series, which usually offers superior cooling and higher-quality power delivery than reference designs.

While there might not be much competition in the high-end segment coming from AMD, there’s still an immense amount of competition between NVIDIA’s various partners to deliver the most appealing custom cooling and board designs for NVIDIA’s products. With giants like ASUS, Gigabyte, and MSI commanding as much market share as they do, it might seem like an uphill battle for a company like ZOTAC, which lacks the same brand strength, to stay in the game. Still, ZOTAC has managed quite well over the years and has really begun to stand out from the crowd with its latest products.

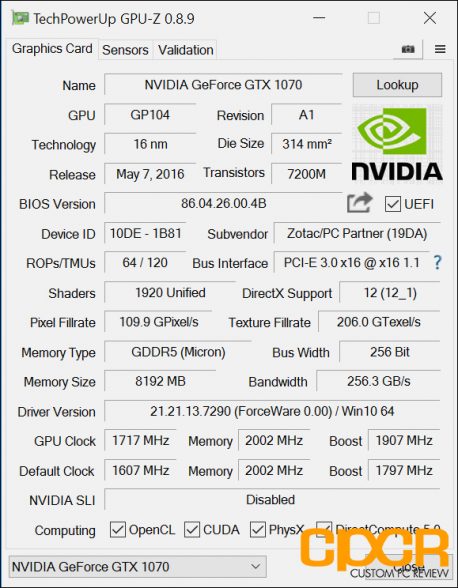

NVIDIA GeForce GTX 1070 Specifications

*ZOTAC non-reference specifications highlighted in bold.

| GPU | GTX 1080 | GTX 1070 | GTX 980 | GTX 970 |
|---|---|---|---|---|
| GPU Core | GP104-400 | GP104-200 | GM204 | GM204 |
| Architecture | Pascal | Pascal | Maxwell | Maxwell |
| Fabrication | TSMC 16nm | TSMC 16nm | TSMC 28nm | TSMC 28nm |
| Core Clock | 1607 MHz | 1506 MHz (**1632 MHz**) | 1126 MHz | 1050 MHz |
| Boost Clock | 1733 MHz | 1683 MHz (**1835 MHz**) | 1216 MHz | 1178 MHz |
| CUDA Cores | 2560 | 1920 | 2048 | 1664 |
| Texture Units | 160 | 120 | 128 | 104 |
| ROPs | 64 | 64 | 64 | 64 |
| Framebuffer | 8GB | 8GB | 4GB | 4GB |
| Memory Type | GDDR5X | GDDR5 | GDDR5 | GDDR5 |
| Memory Interface | 256-bit | 256-bit | 256-bit | 256-bit |
| Memory Clock | 10 Gbps | 8.0 (**8.2**) Gbps | 7.0 Gbps | 7.0 Gbps |
| TDP | 180W | 150W (**220W**) | 165W | 145W |
| Launch Price | MSRP: $599, Founders: $699 | MSRP: $379, Founders: $449 | $549 | $329 |

Taking a look at the chart above, we can see that the GTX 1070 is quite a bit more expensive than the previous-generation GTX 970, with the MSRP raised by $50 from $329 to $379. This alone would not be a deal breaker considering the performance increases offered by the Pascal architecture, but unfortunately, as we’ve seen over the past few months, that MSRP may as well be a unicorn. Due to constrained supply, average pricing for the GTX 1070 is easily north of $400, in the $430 range. The ZOTAC GeForce GTX 1070 AMP! is currently priced at $439, putting it right in line with other custom GTX 1070s. That said, you do get higher factory overclocks than most custom GTX 1070 cards under $450, at 1632 MHz on the core clock and 1835 MHz on the boost clock.

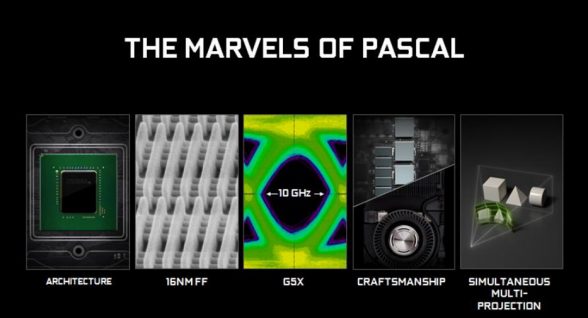

Pascal Architecture Overview

This being our first review of an NVIDIA Pascal-based graphics card, it’s worth quickly going over some of the highlights of the Pascal architecture. It brings a lot of new features and improvements over the previous-generation Maxwell architecture, including an all-new 16nm FinFET manufacturing process that packs more transistors into a smaller die area (7.2 billion, in the case of GP104). This allows for a 1.5x increase in power efficiency while providing up to 70% more performance than Maxwell-based solutions.

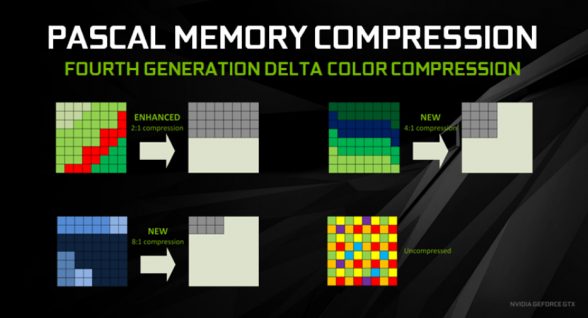

Pascal also introduces NVIDIA’s 4th-generation Delta Color Compression, which allows for an up to 20% increase in effective memory bandwidth. The GPU calculates the differences between pixels in a given block and stores the block as a set of reference pixels along with the delta values from the reference. If the deltas are small, then only a few bits per pixel are needed. If the packed result of reference values plus delta values is less than half the uncompressed storage size, then delta color compression succeeds and the data is stored at half size.
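To make the idea a bit more concrete, here is a rough Python sketch of delta encoding on a single block. This is only an illustration of the principle described above, not NVIDIA’s actual hardware algorithm: the function names, the 8-bit single-channel pixels, the single reference value per block, and the fixed-width delta packing are all our own assumptions.

```python
from typing import Dict, List

BITS_PER_VALUE = 8  # assumed: one 8-bit color channel per pixel


def bits_for_delta(delta: int) -> int:
    """Bits needed to hold a signed delta in two's complement."""
    magnitude = delta if delta >= 0 else -delta - 1
    return magnitude.bit_length() + 1


def try_delta_compress(block: List[int]) -> Dict[str, object]:
    """Encode a block as one reference value plus fixed-width deltas."""
    reference = block[0]
    deltas = [value - reference for value in block[1:]]
    # Every delta is stored at the width of the largest one (an assumption).
    width = max((bits_for_delta(d) for d in deltas), default=0)
    packed_bits = BITS_PER_VALUE + width * len(deltas)
    raw_bits = BITS_PER_VALUE * len(block)
    return {
        "reference": reference,
        "deltas": deltas,
        "packed_bits": packed_bits,
        "raw_bits": raw_bits,
        # Compression succeeds only if the packed form is under half the raw size.
        "compressed": packed_bits * 2 < raw_bits,
    }


smooth_block = [100, 101, 102, 101, 100, 99, 100, 101]  # gentle gradient
noisy_block = [0, 255, 3, 200, 17, 90, 250, 5]          # high-contrast detail

print(try_delta_compress(smooth_block)["compressed"])  # True
print(try_delta_compress(noisy_block)["compressed"])   # False
```

The smooth block packs into 29 bits against 64 bits uncompressed, so it qualifies for half-size storage, while the noisy block needs wider deltas than the raw data and is left uncompressed.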

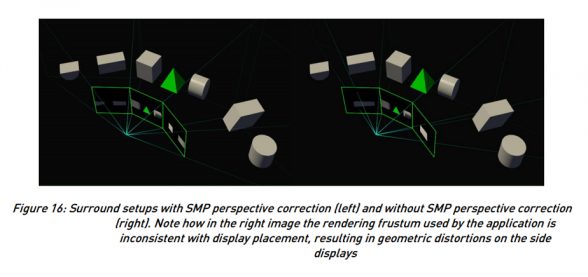

Another huge addition to Pascal is NVIDIA’s Simultaneous Multi-Projection, or SMP. This new hardware feature allows for visual enhancements with a variety of different display technologies, including triple-monitor NVIDIA Surround gaming and VR. A more in-depth article on the subject may be coming down the road, but in short, it allows developers to render an entire scene in a single pass and then map it to multiple viewpoints with the proper perspective. For VR, this allows for an effective 2x gain in performance by eliminating the need to render the scene twice to accommodate both eyes. For multi-monitor configurations, it can help to eliminate the visual anomalies that occur from angling the left and right displays toward the user, although there are no performance increases in this scenario.

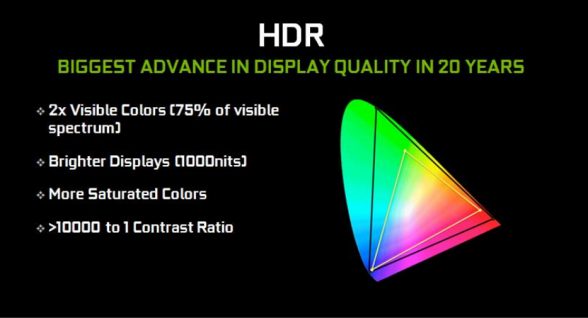

Another visual enhancement introduced with Pascal is support for HDR, or High Dynamic Range, displays. This feature comes along with Pascal’s support for next-generation display connectivity such as DisplayPort 1.4 and HDMI 2.0.

Now that you’ve got an idea of some of the new technologies that launched with the release of Pascal, let’s take a closer look at this beastly graphics card.

[section label=”A Closer Look”]

A Closer Look at the ZOTAC GeForce GTX 1070 AMP!

Starting with the packaging for the ZOTAC GeForce GTX 1070 AMP! Edition, we get a cool yellow and black design which perfectly matches the theme of the company logo.

Included in the packaging, we get a user manual, quick installation guide, a driver installation disc (don’t use this, download the latest drivers from the GeForce website guys), and a nice ZOTAC case badge for showing off your brand preferences. There’s also a pair of dual 6-pin to single 8-pin PCIe adapters, in case your power supply lacks the required PCIe power connectors. We usually don’t recommend using these types of adapters, unless it is a temporary fix. Still, it is always nice to see them included in the package.

Finally, we get a look at the graphics card itself, and boy does it look nice. The ZOTAC GTX 1070 AMP! features the company’s IceStorm cooling system, which includes dual super-wide 100mm fans designed to push more air while operating at lower RPMs for quieter operation. These fans also employ what ZOTAC calls Freeze, which essentially just means they will turn completely off when the card is idling or not using much power and doesn’t need the extra cooling. The card measures just over 11.8 inches long and features a standard dual-slot form factor.

The ZOTAC GeForce GTX 1070 AMP! also features the company’s Carbon ExoArmor, which is fancy talk for saying it has a carbon-fiber pattern on various accents of the cooling shroud. It also has a nice gunmetal gray finish on a mostly metal housing with a kind of rough texture. All in all, it looks and feels very premium. The card also features ZOTAC’s Spectra LED lighting system, which offers a variety of colors that can be applied to both the ZOTAC logo on the side of the card and the two chevron-shaped LED accents located between the fans at the front.

Around back, we have a really cool looking premium backplate which features a gray and yellow design along with the ZOTAC logo. The backplate also wraps around the card extending to the back and the front side to help conceal the components underneath.

This one I’m a little torn on. Aside from the backplate, ZOTAC has designed a very color-neutral card which would fit in a wide variety of builds without clashing. However, the yellow accents on the backplate may make this card a deal-breaker for some, especially those who have invested heavily in a certain color theme such as red/black or blue/black.

Taking a look at the rear I/O, we have the fairly standard 3x DisplayPort 1.4 (HDR-certified), 1x HDMI 2.0, and 1x dual-link DVI connector.

At the other end of the card, we have 2x 8-pin PCIe power connectors, which is one more than the reference/Founders Edition cards require. This would theoretically allow for more power and thus more overclocking headroom; however, we think it still might be a bit overkill for a card such as this one.

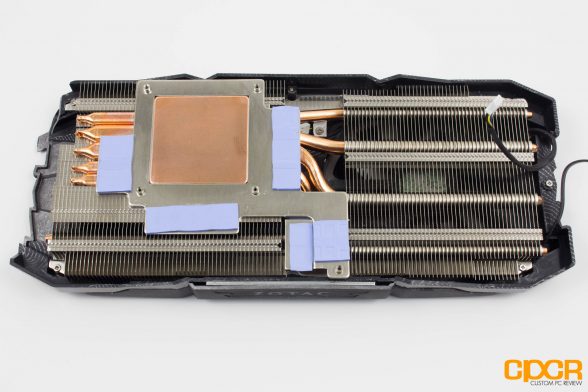

Tearing down the card, we get a better look at the cooling system, which consists of a split, wide aluminum fin array connected by five variably sized copper heat pipes that draw heat from the copper base.

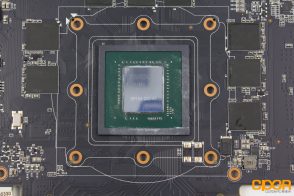

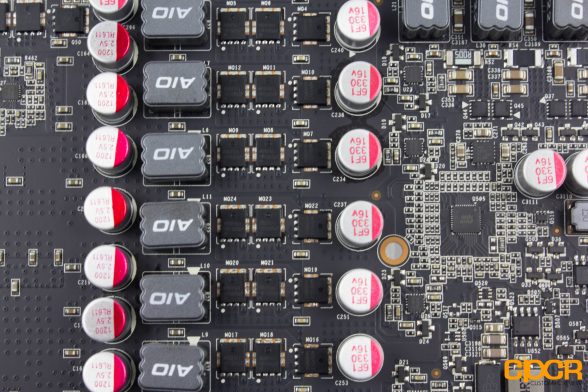

Moving on to the PCB, we get a fully custom layout with our GP104-200 GPU at the heart. Surrounding it are 8x 1GB Micron GDDR5 memory chips, which are different from the Samsung memory chips we’ve seen these cards carry in other reviews. This could mean that there are versions of the card with both types of memory, which shouldn’t really have any effect on the end user but may yield different overclocking results.

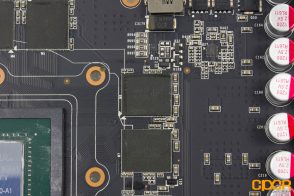

For the VRM, we have an 8-phase power design which consists of high-quality MDU1511 MagnaChip Semiconductor MOSFETs along with a μP9511P UPI Semiconductor Corp PWM controller. This is actually the very same controller found on the NVIDIA Founders Edition versions of the GTX 1080 and GTX 1070.

[section label=”Testing Setup and Methodology”]

Testing Setup

Haswell-E X99 Test Bench

| CPU | Intel Core i7 5960X @ 4.2GHz |
|---|---|
| Motherboard | Asrock X99 OC Formula |
| Memory | Crucial Ballistix Elite 16GB DDR4-2666 |
| Graphics | N/A |
| Boot Drive | Samsung 850 EVO 500GB M.2 SSD |
| Storage Drive | ADATA Premier SP610 1TB SSD |
| Power Supply | DEEPCOOL DQ1250 |
| CPU Cooler | DEEPCOOL GamerStorm Captain 360 |
| Case | Phanteks Enthoo Pro |
| Operating System | Windows 10 Pro |

Special thanks to Phanteks, DEEPCOOL, Asrock, and Crucial for supplying vital components for this test bench.

Testing Methodology – 2016 Update 1.1

With major GPU releases from both AMD and NVIDIA that fully support the latest APIs such as DirectX 12 and Vulkan, and a number of new games available which take advantage of these new features, we decided it was time to update our GPU testing suite to more accurately represent the modern gaming landscape. We’ll still be using FRAPS to log performance results in a number of DirectX 11 and OpenGL titles. However, we’ll also be using PresentMon, an open-source command-line tool that works very similarly to FRAPS but supports DirectX 12, Vulkan, and UWP-enabled titles.

Like any good graphics test suite, ours is constantly evolving to suit the current gaming landscape, with incremental changes adding new titles and benchmarks we believe are necessary. Update 1.1 brings with it the addition of 3DMark’s all-new DirectX 12 benchmark, Time Spy, in addition to changing our DOOM (2016) testing to utilize the new Vulkan API instead of the older OpenGL. Now, you might ask why we opted not to test both, and that is a good question. The decision comes down to the fact that, in our experience, DOOM in Vulkan runs better than in OpenGL on all modern hardware, and we don’t see any reason to continue testing it in the inferior API mode. Of course, if you find this unacceptable, be sure to mention it in the comments down below and we will consider re-adding it in future tests.

The following games/benchmarks will be tested:

- 3DMark FireStrike

- 3DMark Time Spy

- Rise of The Tomb Raider (DX11 & DX12)

- HITMAN (DX11 & DX12)

- Ashes of the Singularity (DX12)

- Gears of War: Ultimate Edition (DX12)

- Grand Theft Auto V

- The Witcher 3: Wild Hunt

- Project CARS

- DOOM (Vulkan)

All titles will be benchmarked a minimum of three times per configuration, with the average of those results being displayed in our graphs. Performance will be measured in average FPS as well as the 99th (1% low) and 99.9th (0.1% low) percentiles. Those results are gathered from the frame time data recorded using both FRAPS and PresentMon; however, they are converted from milliseconds (ms) to frames per second (FPS) in order to simplify things. Data gathered from benchmarking tools is analyzed using FRAFS and Microsoft Excel.
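For readers who want to reproduce these metrics from their own frame time logs, the Python sketch below shows one way the numbers could be derived. It is an approximation rather than our exact workflow: the function names are our own, the input is assumed to be a plain list of frame times in milliseconds already pulled out of a FRAPS or PresentMon log, and the nearest-rank percentile method is an assumption that may differ slightly from what FRAFS or Excel calculates.

```python
import math
from statistics import mean
from typing import Dict, List


def percentile(ordered: List[float], pct: float) -> float:
    """Nearest-rank percentile of an ascending list (pct between 0 and 100)."""
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize_run(frame_times_ms: List[float]) -> Dict[str, float]:
    """Convert one run's frame times (ms) into FPS metrics."""
    ordered = sorted(frame_times_ms)
    return {
        # Average FPS is total frames divided by total time in seconds.
        "avg_fps": 1000.0 * len(ordered) / sum(ordered),
        # The 99th percentile frame time becomes the "1% low" FPS figure.
        "p99_fps": 1000.0 / percentile(ordered, 99.0),
        # The 99.9th percentile frame time becomes the "0.1% low" FPS figure.
        "p99_9_fps": 1000.0 / percentile(ordered, 99.9),
    }


def average_runs(runs: List[List[float]]) -> Dict[str, float]:
    """Average each metric across repeated runs of the same configuration."""
    summaries = [summarize_run(run) for run in runs]
    return {key: mean(s[key] for s in summaries) for key in summaries[0]}


# Made-up data: 99 frames at 10 ms and a single 50 ms hitch, repeated three times.
run = [10.0] * 99 + [50.0]
print(average_runs([run, run, run]))
```

Note that a single long frame barely moves the average but dominates the 0.1% low, which is exactly why we report the percentiles alongside average FPS.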

All titles are tested at “High” to “Very High” settings at resolutions determined by the GPU’s market segment. In the case of this review, it will be 1080p and 1440p.

[section label=”Overclocking with ZOTAC FireStorm”]

ZOTAC GeForce GTX 1070 AMP! Overclocking

Initial Boot

ZOTAC FireStorm

Using ZOTAC’s own FireStorm software, a purpose-built application for managing and monitoring the GPU’s fan speed, temperatures, clock speeds, and lighting features, we were able to get a 220MHz overclock on our GPU clock, which gives us a boost clock of 2,017 MHz. However, with the way that Pascal’s GPU Boost 3.0 works, the actual clock can be much higher depending on temperatures, voltage, TDP, and load. In our experience, it reached as high as 2,088 MHz, which is not bad at all. Some cards can break 2,100 MHz, but ours was unable to get there. We decided not to touch the memory, as 8.2 Gbps seems more than adequate, and in terms of real-world performance, it’s much more effective to focus power on the GPU’s core clocks instead.

[section label=”Power Consumption and Temperatures”]

ZOTAC GeForce GTX 1070 AMP! Performance

Power Consumption

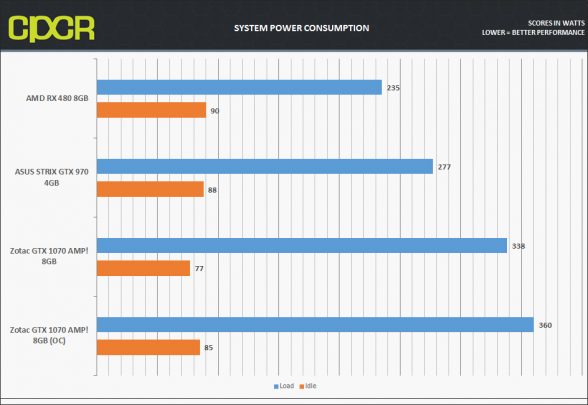

For power consumption testing, we’ll be measuring full system power while idle along with full system power with the graphics card running at full load using Furmark. All power consumption measurements are taken at the outlet with a simple P3 Kill A Watt meter.

Power draw for the card is as expected. At stock settings, it is about 61 watts higher than our ASUS STRIX GTX 970 under load, and overclocking extends this to 83 watts. While this is measuring total system power draw, we can estimate that the total power draw for the GPU itself is roughly 227W at stock and 249W while overclocked. This is quite a bit higher than the 150W reference TDP of the GTX 1070; however, these figures are right in line with ZOTAC’s specifications for the AMP! edition.
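The arithmetic behind that estimate can be sketched in a few lines of Python. One caveat: the baseline figure for the GTX 970 is not stated anywhere in this review. The 166W used here is only what the quoted numbers imply (227 − 61 and 249 − 83 both give 166), so treat it, along with the function name, as an assumption. Measurements taken at the wall also include power supply losses, so the result is a rough guide at best.

```python
REFERENCE_TDP_W = 150  # GTX 1070 reference TDP from the specifications table

# Assumption: baseline implied by the review's own figures (227 - 61 = 249 - 83).
IMPLIED_BASELINE_W = 227 - 61


def estimate_gpu_power(delta_w: float, baseline_w: float = IMPLIED_BASELINE_W) -> float:
    """Estimate GPU power as an assumed baseline plus the measured system delta."""
    return baseline_w + delta_w


for label, delta in (("stock", 61), ("overclocked", 83)):
    watts = estimate_gpu_power(delta)
    over_tdp = 100.0 * (watts - REFERENCE_TDP_W) / REFERENCE_TDP_W
    print(f"{label}: ~{watts} W, {over_tdp:.0f}% over the reference TDP")
```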

Temperature Testing

For idle temperature, we’ll be taking a reading when the graphics card is idle for 5 minutes after a cold boot. Load temperatures are taken after a full 30-minute burn using Furmark.

While some of you aren’t huge fans of Furmark, as it creates an ultra-heavy, unrealistic load on the graphics card, we feel it’s a more useful tool because it differentiates between graphics cards that have extremely well-designed coolers and ones whose cooling solutions simply pass the test, if you will. Most games these days generally don’t create enough load or heat to even exceed the temperatures where the fans would spin up on most custom coolers, so it’s difficult to adequately rank cooling solutions without using a tool like Furmark. During all tests, the GPU intake air temperature is approximately 25° Celsius.

Temperatures are also quite good for a card of this performance. At 75°C under load at stock settings, the card is able to stay quite cool even under the most extreme, unrealistic loads such as Furmark. In reality, while gaming we can expect the card to stay in the mid-60s, which is what we experienced most of the time. Once overclocked, it does jump up into the low 80s, but again, this is an extreme-case scenario, and even then it’s still quite safe; the card shouldn’t start to throttle until temperatures reach the mid-90s.

[section label=”6. 3DMark”]

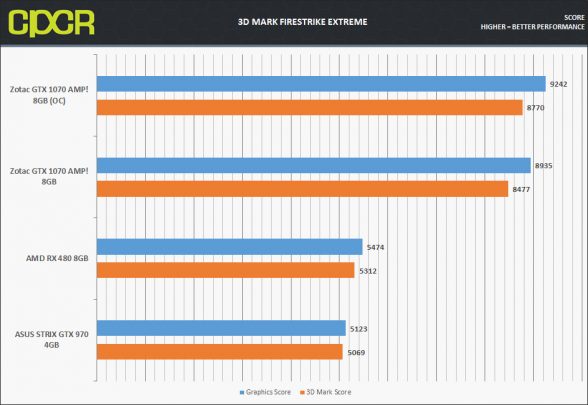

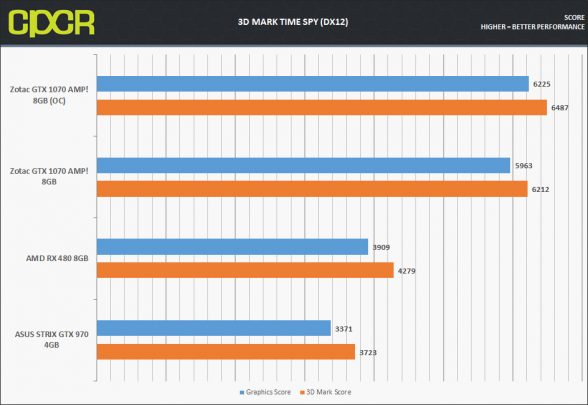

3DMark

The new 3DMark, now referred to as just 3DMark, is Futuremark’s latest update to the popular 3DMark series of benchmarks. The updated 3DMark now includes multiple benchmarks for cross-platform support as well as updated graphics to push the latest graphics cards to their limits.

Fire Strike

Time Spy

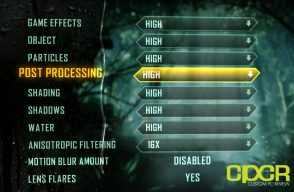

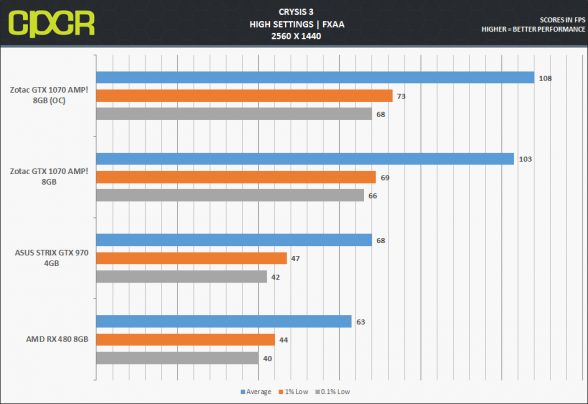

[section label=”Crysis 3″]

Crysis 3

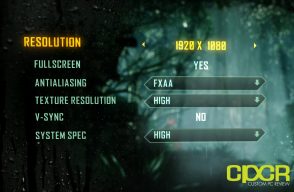

Crytek’s third installment of the legendary Crysis series, like its predecessors, still serves as one of the best-looking and most graphically intensive games to date. It offers photo-realistic textures, advanced lighting, and massive environments that mix lush organic plant life and foliage with large, damaged, and demolished buildings.

We test the game in a 60-second run during the first indoor area, which features a few firefights, explosions, and some stealth play. It’s not the most graphically intensive portion of the game, but it is one of the most easily repeatable for our purposes.

Settings

Results

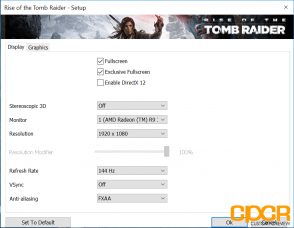

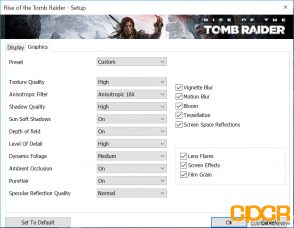

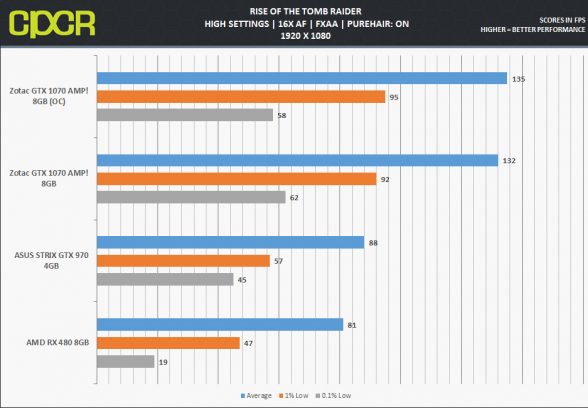

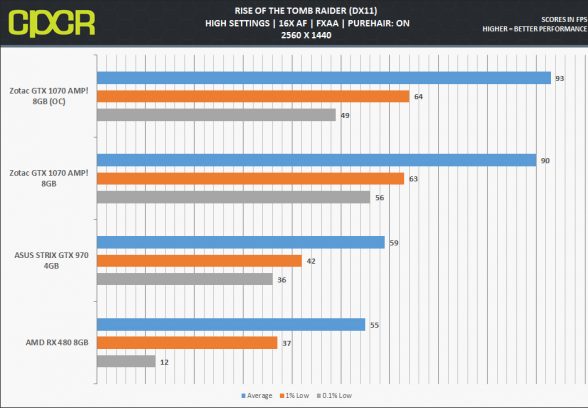

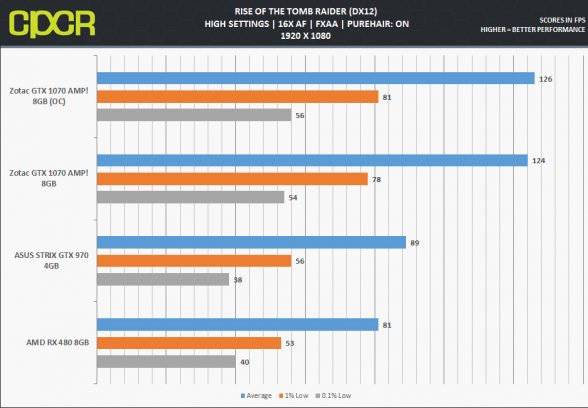

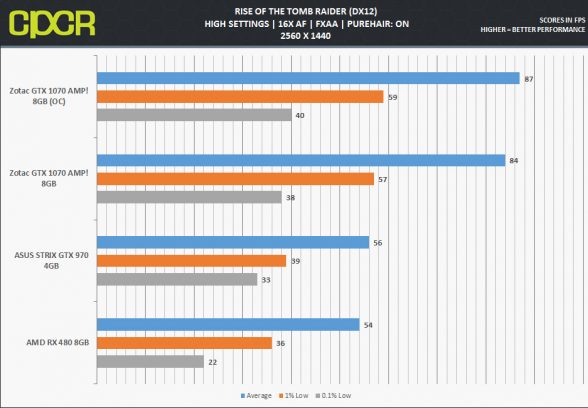

Rise of the Tomb Raider

The follow-up to Crystal Dynamics’ award-winning Tomb Raider reboot, Rise of the Tomb Raider takes users across the world to a variety of exotic locales with even more tombs. It features physically based rendering, HDR and adaptive tone mapping, deferred lighting with localized Global Illumination for realistic lighting, and volumetric lighting that enables God Rays and light shafts.

We test Tomb Raider using the game’s built-in benchmarking tool.

Settings

Results (DX11)

Results (DX12)

[section label=”Grand Theft Auto V”]

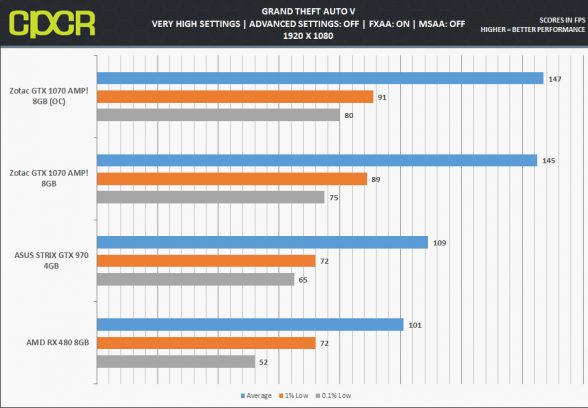

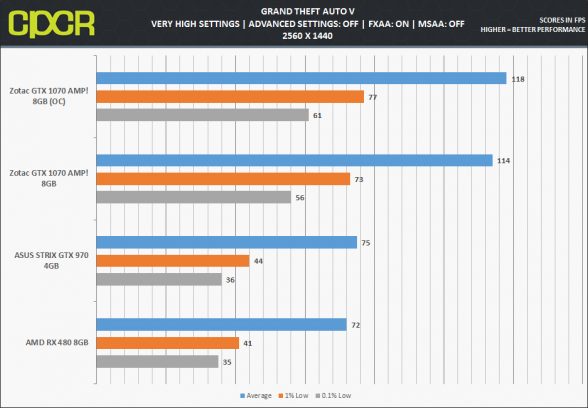

Grand Theft Auto V

The hotly anticipated PC release of Rockstar Games’ fifth installment of their Grand Theft Auto franchise easily proves once again that when it comes to open-world games, no one does it better. With lots of new features and graphical enhancements built specifically for the PC version, it’s no wonder it took them so long to optimize it. With advanced features such as tessellation, ambient occlusion, realistic shadows, and lighting, mixed with the largest open-world map in franchise history, this is one of the most beautiful, well-optimized PC titles on the market.

We test Grand Theft Auto V using the last scene in the game’s built-in benchmarking tool.

Settings

Results

[section label=”The Witcher 3: Wild Hunt”]

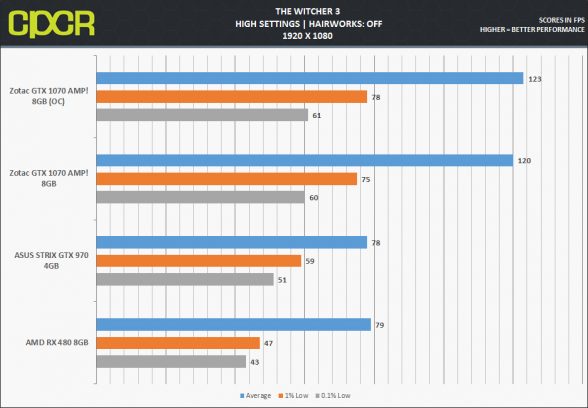

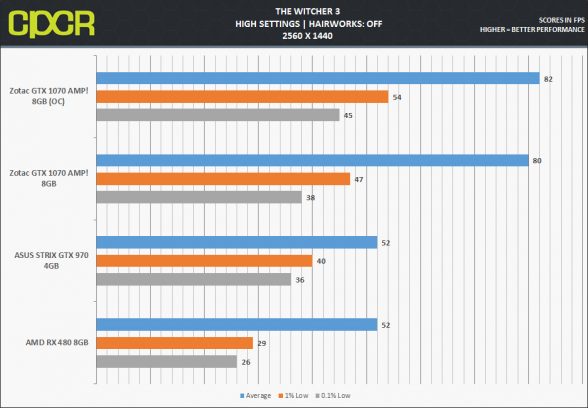

The Witcher 3: Wild Hunt

CD Projekt RED’s The Witcher series has long been credited with producing some of the most beautiful and graphically demanding PC titles, and its latest installment, The Witcher 3: Wild Hunt, is no exception. With beautiful, large open-world environments, detailed character designs, high-resolution textures, and advanced features such as God Rays and Volumetric Fog, in addition to a slew of post-processing effects, this is definitely one impressive-looking game.

We test The Witcher 3 with a 60-second lap around the first village you come across in the campaign. This is one of the best places for testing, as it exhibits some of the game’s most graphically intense features, such as God Rays and Volumetric Fog. The test offers very little variance, making it a very repeatable benchmark, which is difficult to find in most open-world games.

Settings

Results

[section label=”Project CARS”]

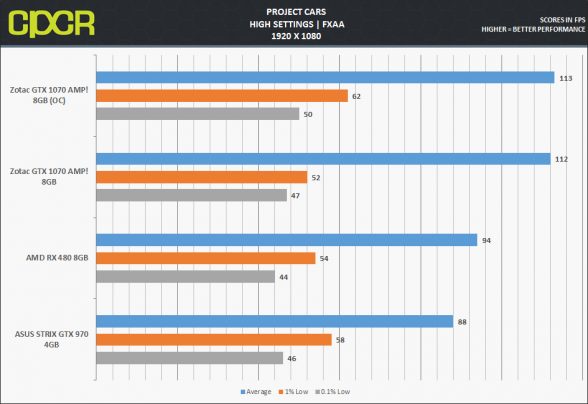

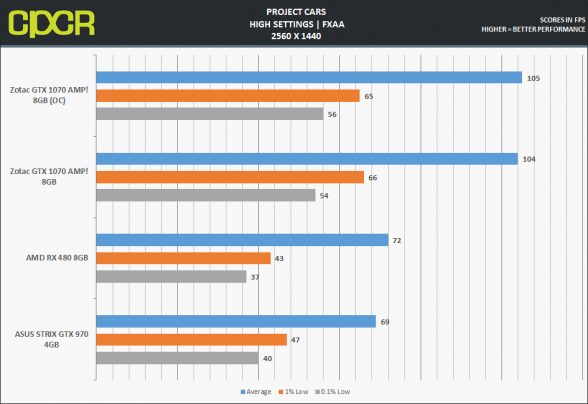

Project CARS

Next-gen racing simulator Project CARS was met with much anticipation when developer Slightly Mad Studios first announced the community-assisted project. While it may not be the definitive choice for racing sim enthusiasts, it is easily one of the best-looking racing games available, featuring photorealistic vehicle models, real-world tracks, and realistic rain and weather effects. Despite some initial performance issues on AMD hardware, which have been mostly patched, it is still one of the best racing simulators available for testing graphics performance.

We test the game using the in-game replay system. The race takes place on the Nürburgring race track, with fixed weather effects transitioning from overcast to thunderstorms.

Settings

Results

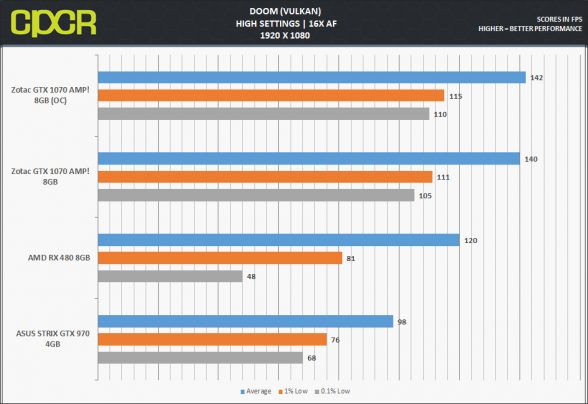

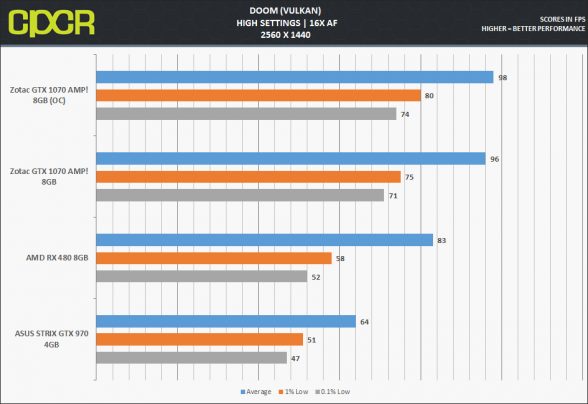

DOOM

The latest installment of id Software’s legendary DOOM series, the new DOOM brings with it the latest graphics technologies and a fully uncapped frame rate thanks to the all-new id Tech 6 game engine. With some of the best graphics available on PC today, DOOM brings to life its hellish environments and demons for a truly jaw-dropping experience.

We test DOOM using a 60-second run during the first mission of the game.

Settings

Results (Vulkan)

[section label=” HITMAN (DX11/DX12)”]

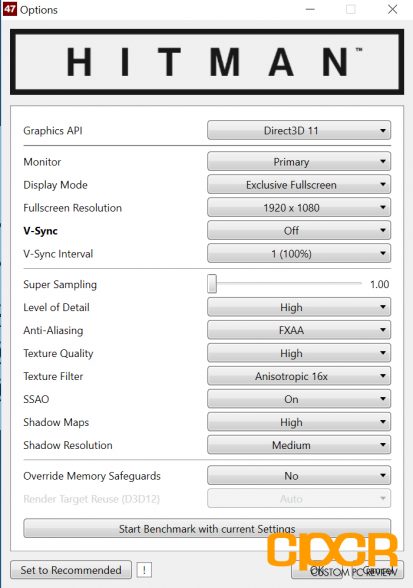

HITMAN

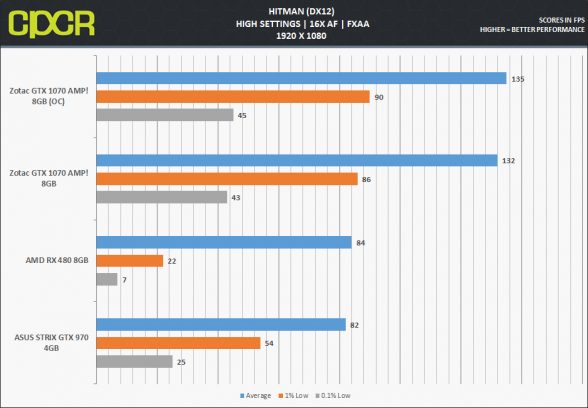

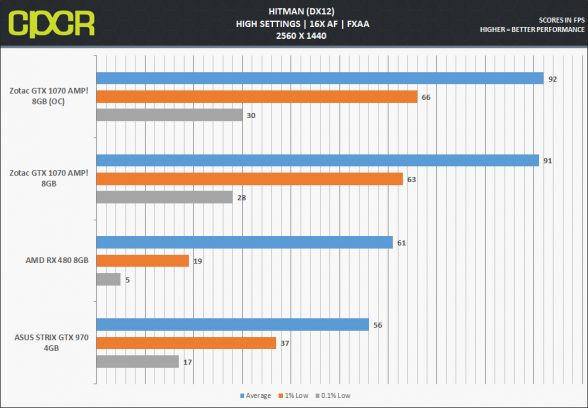

The follow-up to 2012’s Hitman: Absolution, simply titled HITMAN, is the latest game in the series from developer IO Interactive. It takes users on an episodic adventure and lets them compete online against other players. The new game also brings with it support for Microsoft’s latest DirectX 12 API.

We test HITMAN using the game’s built-in benchmarking utility.

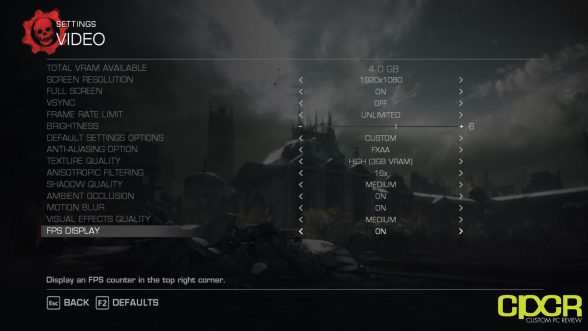

Settings

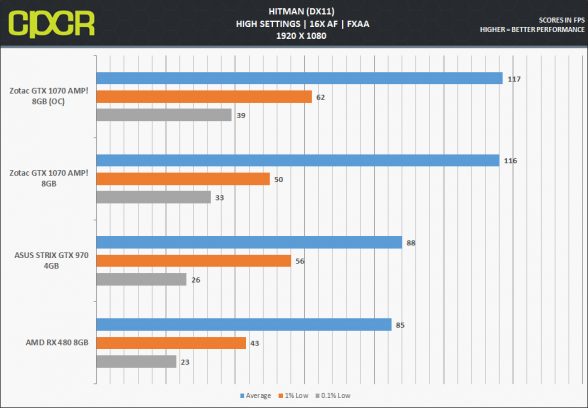

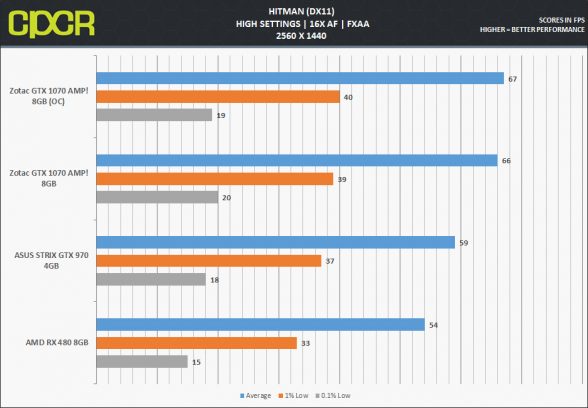

Results (DX11)

Results (DX12)

[section label=”Gears of War: Ultimate Edition (DX12)”]

Gears of War: Ultimate Edition

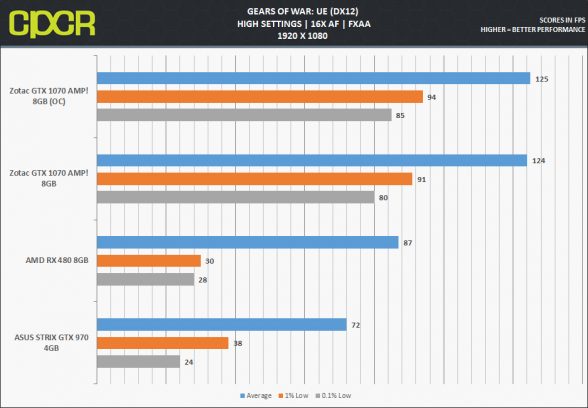

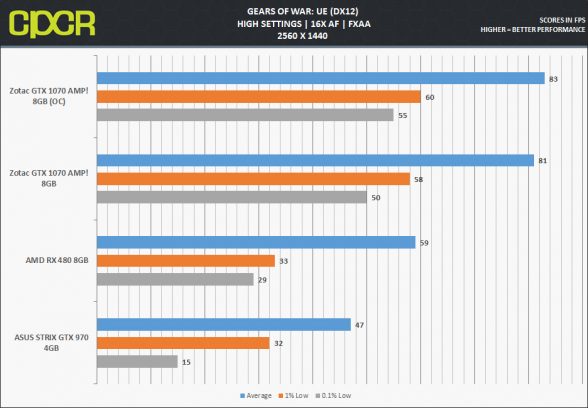

The remaster of the original Gears of War comes to PC with completely updated graphics and reworked cinematics. Gears of War: Ultimate Edition brings the classic shotgun combat you know and love to the mouse and keyboard. The addition of DX12 support makes it a must-have for any graphics benchmark.

We test Gears of War using the game’s built-in benchmark utility.

Settings

Results (DX12)

[section label=”Ashes of the Singularity (DX12)”]

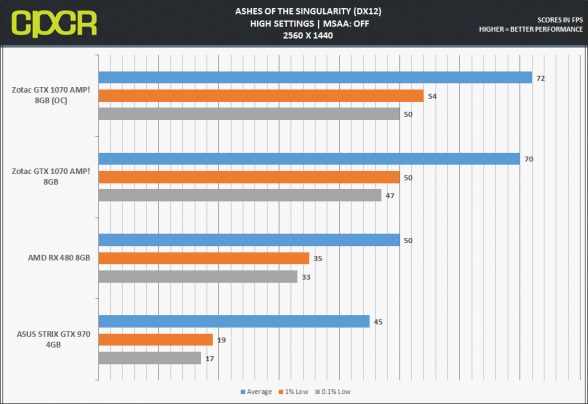

Ashes of the Singularity

Oxide’s sci-fi RTS game, Ashes of the Singularity, is a staple in any graphics review as it provides one of the best implementations of the latest DirectX 12 API, complete with Asynchronous Compute for improved performance and even explicit multi-GPU support, which allows for mixing multiple different graphics cards for additional performance.

Settings

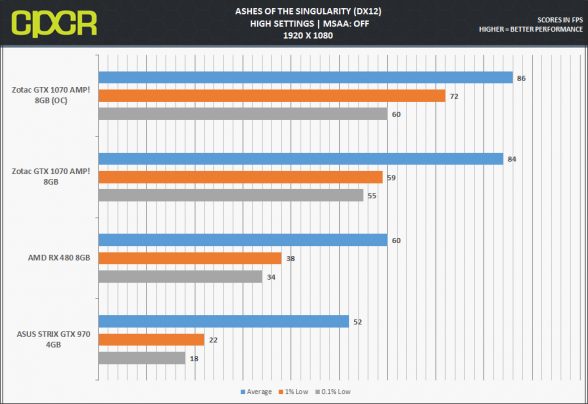

Results (DX12)

[section label=”Conclusions”]

ZOTAC GeForce GTX 1070 AMP! Conclusions

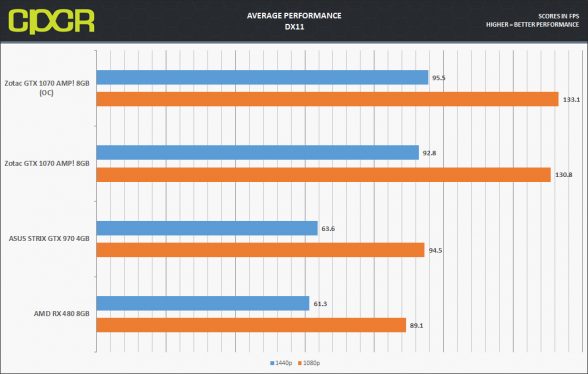

With all our testing done and the results in, it is very clear that the GTX 1070 is a huge step up over the previous-generation GTX 970, with a performance increase of up to roughly 50% in DirectX 11 titles. In DirectX 12/Vulkan, the story is even more dramatic, with an increase of up to nearly 62%. That’s a substantial generational increase and is basically unprecedented.

Usually, we’d recommend that users upgrade every other generation; however, with Pascal it seems even current GTX 900 series owners will see worthwhile performance improvements making the jump to the GTX 10 series.

Now, let’s talk more specifically about the ZOTAC GTX 1070 AMP! Edition. While I haven’t had the pleasure of testing any other GTX 1070-based graphics cards, I can tell you that this is still easily one of the best graphics cards I’ve tested, period. In terms of build quality, cooling performance, overclocking, and general stability, the ZOTAC GTX 1070 AMP! leaves little to be desired. Aesthetically, I have my gripes with some of the design choices, such as the yellow accents on the backplate. That being said, it is not the worst-looking card out there by far, and the yellow accents are a welcome change of pace from the gamer-rific black and red color schemes which usually flood the market.

In terms of pricing, the ZOTAC GTX 1070 AMP! Edition is right in the middle of the pack, where barebones GTX 1070 cards are priced in the $400-$420 range, and higher-end, triple-fan, or liquid-cooled cards can command a price tag of $480-$500. The GTX 1070 AMP is priced at $439, which is fairly typical for a card of its feature-set, although it does tend to beat out its nearest competitors in terms of factory overclocks.

With all that said, it is fairly easy for me to recommend this card to anyone in the market for a high-end graphics card. While I wouldn’t say it is the best custom GTX 1070 available, it may just be the best for the money. Recommended!

Sample provided by: ZOTAC

Available at: Amazon

Hm, anyone think it’s fishy no details were given on memory OC limits and stability? It’s just so convenient to say “hey, memory doesn’t matter, let’s move on to the core.” I wonder, WOW, if it’s because this card had Micron memory and that every Micron-based GTX 1070 has memory voltage regulation issues? https://forums.geforce.com/default/topic/963768/geforce-drivers/gtx-1070-memory-vrm-driver-or-bios-bug-in-micron-memory-1070-cards-/1/

That this reviewer avoided the problem entirely tells me it’s possible Nvidia is even telling reviewers not to touch on the issue. This is FUCKED UP.

I’ve not been in contact with NVIDIA at all. This sample was provided by Zotac directly and they never mentioned memory specifications. I was actually surprised to find Micron memory when other reviewers’ samples of this card featured Samsung. I even mentioned this in my teardown.

I didn’t look at memory overclocking because it doesn’t usually offer much of a performance improvement when you’re already dealing with such high clocks at stock. I also didn’t touch on it much in my RX 480 review which also has 8 Gbps memory, either. Truth is, when I couldn’t get more out of the GPU clock without instability, I chose not to try to push power to the memory and potentially make the GPU OC unstable.

We don’t really go too in-depth with GPU overclocking, I always go for the easiest “quick and dirty” overclock possible rather than spend much time on it and get results which are likely difficult for most users to recreate on their own.

Actually, the rx 480 is often bandwidth limited.

Your explanation is fair enough, just so happens you’re the first guy who reviewed Micron memory 1070. Coincidentally, you didn’t OC the memory.

I had planned on writing something along the lines of Tranta’s comment but I feel you’ve explained honestly.

I’ve not seen any evidence that the 8GB model of the RX 480 is usually bandwidth limited. I don’t even know of any cards which increase the memory clocks much past 8.0 Gbps. That said, the 4GB model, which is often clocked at 7.0-7.2 Gbps, does see some bandwidth limitations in many titles, leading to a performance gap between the two even where memory capacity shouldn’t be much of a factor.

That said, I do appreciate your comments. We always try to be as transparent as possible, but in the end there are things that just slip our minds when writing. It is never an intentional misdirection.

May I know what kind of graphics score were you getting for firestrike 1080p without including the physics test? For me personally both my rma-ed zotac 1070 amp! (micron chipped) edition was going at a score range of 18200-18300. Only my very first zotac was going at a graphics score of 19000 but died subsequently.

Just sold my zotac 1070 amp! edition. First one died after 2 weeks, rma-ed twice after due to temperature issue. Pretty sure that mine was micron chips due to the bad coil-whines. both the cards I rma-ed after however showed a graphics score of 18200-18300..which is 700 lower than what yours showed..was I just unlucky and drew the short straw of the stack (twice might I remind you) or is there something about micron chips that just isnt worth looking at?

None of the issues you’re talking about (especially where coil whine is concerned) should have anything to do with the type of memory used. Micron memory is used in a lot of different graphics cards and they rarely face any issues.

I’m sorry about your poor experience. Unfortunately, this stuff does happen from time to time, with any company.

Thank you for enlightening me on certain aspects of micron. I am still quite a beginner and have only relied on reading comments from here and there. Anyway, I managed to sell my zotac amp! and changed to a zotac 1070 amp! extreme. My prayers got answered and my card came with the samsung gddr5 chips. Not that I dont believe in micron but I just wanna play safe and have a better chance at overclocking without having to flash any bios or so forth.

but thank you, I really do appreciate it!

Nice, you got lucky there! The first AMP! Extreme I received was faulty and the replacement I got is already starting to act flaky. I believe both of them had Micron memory, however, I’m not sure if that has any relation to the issues I’ve had. I swear the performance of the card has degraded since I’ve received it. Maybe I’m just paranoid but I swear some games ran smoother when I was running two GTX 760s in SLI. lol

Anyways, I’m considering sending it back and going with EVGA as I have previous experience with their cards and they’ve done me well so far. I hope I don’t have to go that far though.

Yeah I’d have to agree with @donnystanley:disqus on that one. Coil whine is usually something with the power delivery system. It’s just the sound of electricity passing through your components. The more electricity, like when your GPU is under load, the louder the whine. Sometimes it may be due to a lower end PSU that doesn’t supply very good power, but since your new card doesn’t have that issue, it may just be that the components on the cards you’ve owned don’t like your PSU, or just luck of the draw I suppose.

As for memory, generally only overclocking is where you’d see the benefit on certain brands, but it really depends on the batch of memory. Sometimes some batches of GDDR5 from some manufacturers just turn out to be overclocking beasts while others won’t even go 10MHz over the factory clocked specs. Usually memory doesn’t cause graphics cards to die or cause coil whine though.

i bought 1070 mini playing everything max at 1920×1200